Getting Hands-On with Temporal

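First Impressions: Temporal

Temporal is an open-source distributed workflow orchestration framework for building reliable, scalable distributed applications. It grew out of Uber's Cadence project, was founded in 2019 by Cadence's original core team, and is now widely regarded as a de facto standard for cloud-native workflow orchestration.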

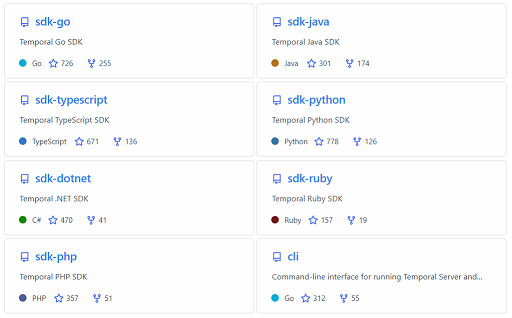

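As a platform for building, running, and managing distributed workflows, it provides a workflow engine and SDKs for multiple programming languages.

Temporal's core concepts are the Workflow and the Activity. A Workflow defines the logic and execution order of a business process; an Activity represents a concrete operation or task. Temporal guarantees that workflow execution is reliable: even in the face of failures and network interruptions, a workflow can recover and continue running.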

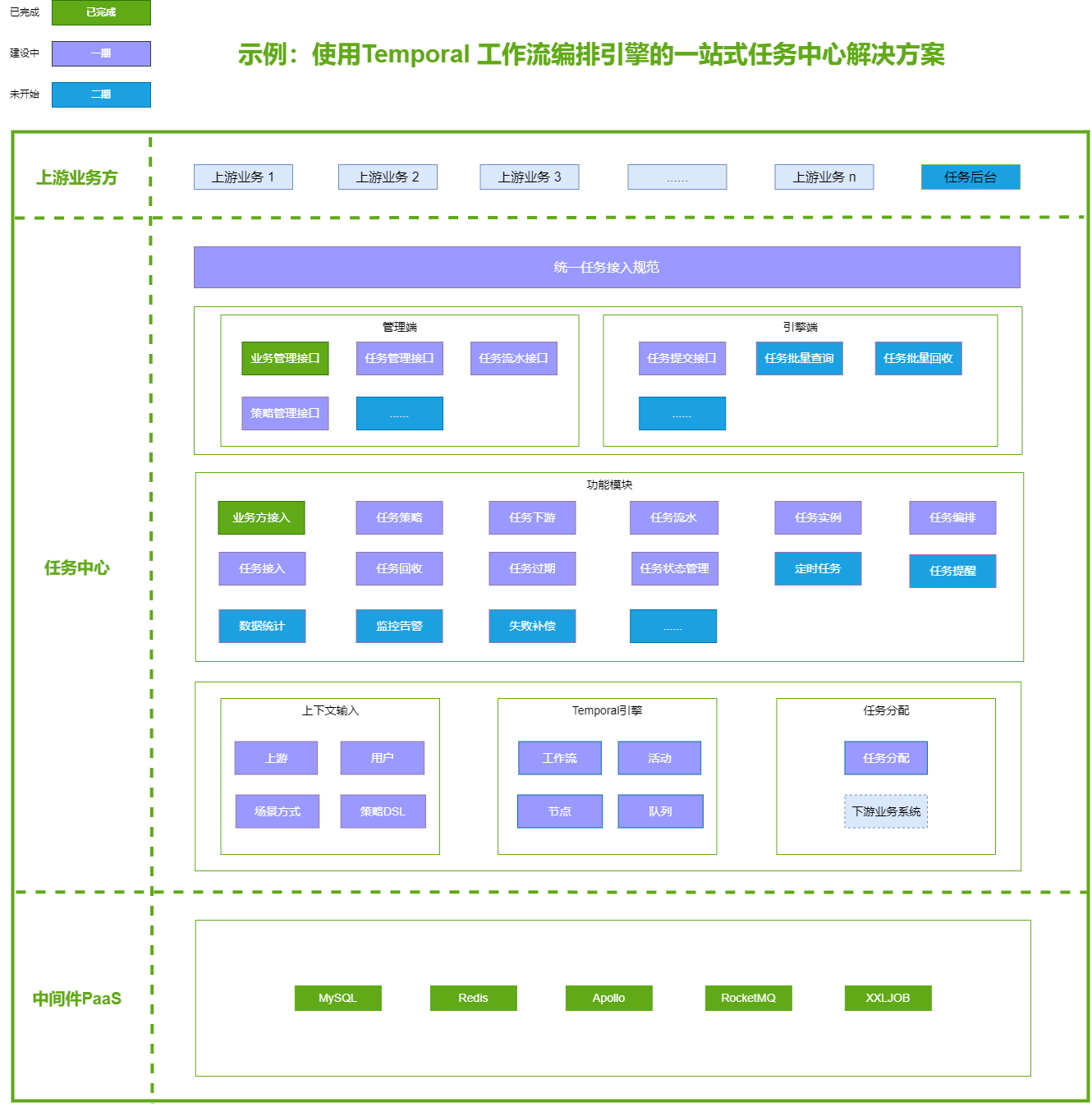

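If you have run into any of the following scenarios at work, Temporal is worth a look.

- Complex business processes that span services and long time periods

- Business process modeling (BPM)

- DevOps workflows

- Saga distributed transactions

- Big-data processing and analysis pipelines

- Serverless function orchestration

These scenarios look unrelated, but they share one thing: they all need orchestration. The key pain point Temporal addresses is orchestration in distributed systems.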

What?

Temporal is the open source runtime for managing distributed application state at scale.

Why?

The most valuable, mission-critical workloads in any software company are long-running and tie together multiple services.


From the Temporal GitHub README:

Temporal is a durable execution platform that enables developers to build scalable applications without sacrificing productivity or reliability. The Temporal server executes units of application logic called Workflows in a resilient manner that automatically handles intermittent failures, and retries failed operations.

Understanding: The Essence of Orchestration

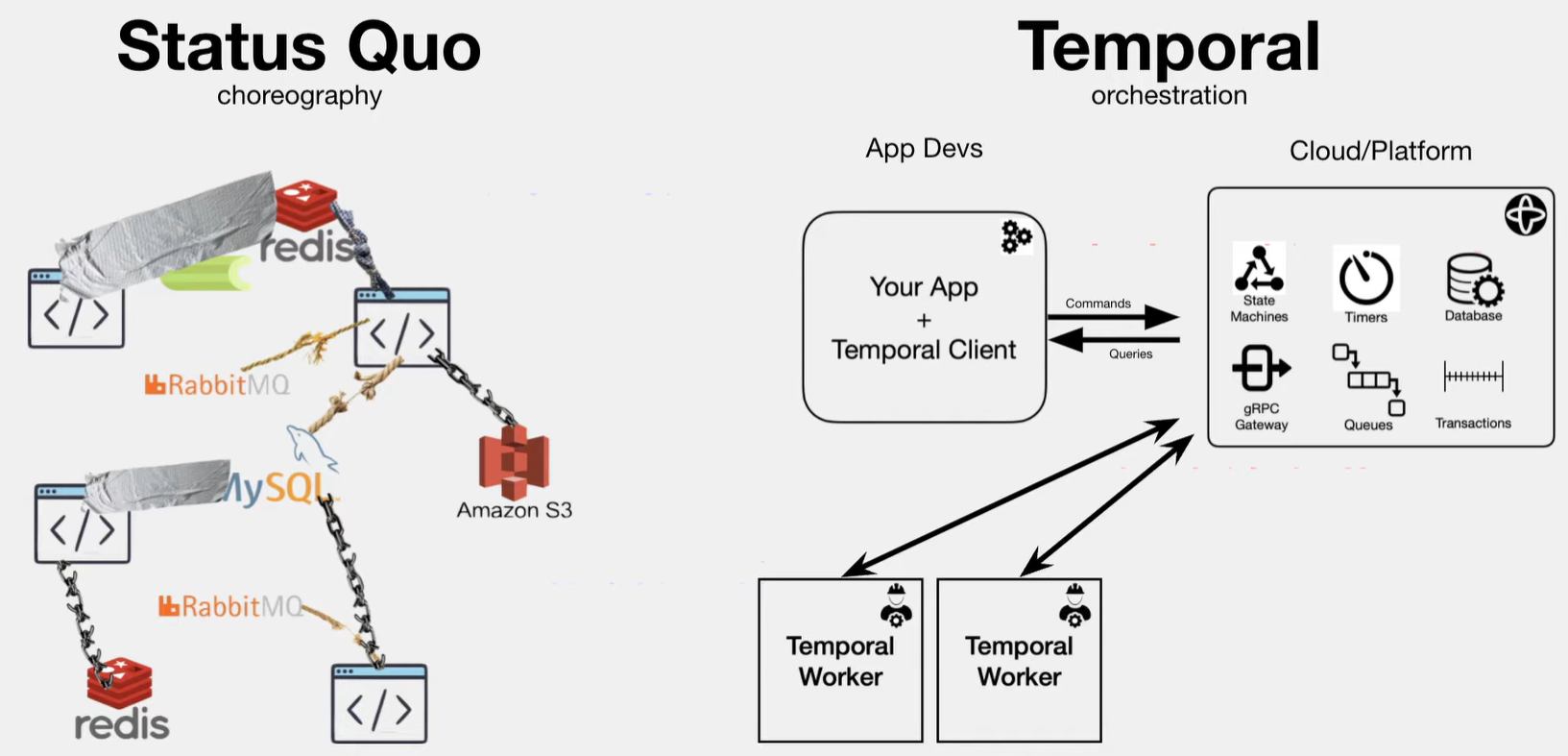

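To understand orchestration, it helps to contrast it with its counterpart: choreography.

Choreography vs. orchestration (image from the web)

Start with choreography. When developing microservices, message queues (MQ) are often used for event-driven business logic, implementing eventually consistent data flows across multiple services. That is choreography. Once an MQ is introduced, however, a series of problems may follow: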

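- Message ordering

- Retries and idempotency

- Tracing events and messages across the whole chain

- Business logic scattered across too many places

- Reconciliation and correction once data has already become inconsistent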

- …

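In a complex microservice system an MQ is a useful component, but it is no silver bullet, as anyone who has lived through these problems knows. If you rely too heavily on MQ-style event-driven designs without sufficiently strong message governance, the whole distributed system becomes noisy and hard to maintain.

What if we change approach and appoint a "scheduling authority", a "conductor" that controls how every message flows? That is exactly what orchestration means.

Choreography has an unbounded context and is decentralized: each component only cares about handling and publishing its own events, everything is asynchronous, and the emphasis is on decoupling. Orchestration has a bounded context and a global orchestrator: the process is modeled globally as a state machine, and the emphasis is on cohesion.

All of Temporal's use cases are orchestration scenarios with a global context and high cohesion. For example, BPM has an explicit flowchart, DevOps and big-data pipelines have an explicit DAG, and long-lived transactions have explicit execution and compensation flows.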
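To make the "explicit execution and compensation flow" of a long-lived transaction concrete, here is a minimal sketch in plain Python. It does not use the Temporal SDK; `run_saga`, the step names, and the failing `charge_card` step are all hypothetical and exist only to illustrate a central orchestrator that runs steps in order and, on failure, undoes the completed ones in reverse.

```python
from typing import Callable, List, Tuple

# (step name, action, compensation) -- all names here are illustrative only
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step], log: List[str]) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    done: List[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
            log.append(f"done:{name}")
            done.append(step)
        except Exception as exc:
            log.append(f"failed:{name}:{exc}")
            for prev_name, _, compensate in reversed(done):
                compensate()
                log.append(f"compensated:{prev_name}")
            return False
    return True


def _fail() -> None:
    raise RuntimeError("card declined")


def demo_saga() -> List[str]:
    """Second step fails, so the first step is compensated and the third never runs."""
    log: List[str] = []
    steps: List[Step] = [
        ("reserve_stock", lambda: None, lambda: None),
        ("charge_card", _fail, lambda: None),
        ("ship_order", lambda: None, lambda: None),
    ]
    run_saga(steps, log)
    return log
```

The point of the sketch is that the whole flow, including the undo path, lives in one place, which is the cohesion that orchestration buys compared with logic scattered across event handlers.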

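Temporal lets us write workflow code the way we write ordinary code, but that code does not necessarily run on the local machine: the server's signaling decides which line yields and when. Many distributed-system resilience problems are also encapsulated away, such as distributed locks, retries that fail because of a crash, data errors caused by retrying after expiry, and timing gaps when handling concurrent messages.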

Enumerating: Key Temporal Concepts

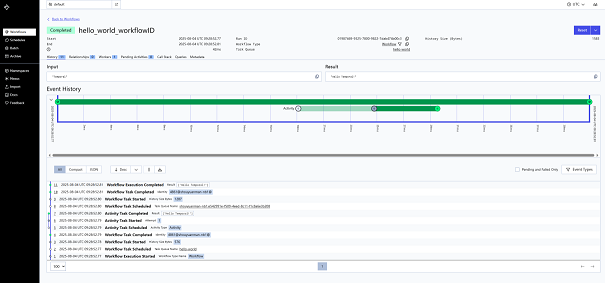

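Workflow

Workflow is the key concept at the orchestration layer. Each kind of workflow is registered with the server as a WorkflowType, and each WorkflowType can create any number of running instances, called WorkflowExecutions. An execution is identified by its WorkflowID; every run also has a unique RunID, which is what distinguishes successive runs of the same WorkflowID under Cron or Continue-as-New. A Workflow may contain loops and may nest child workflows (ChildWorkflow).

- It is a long-running process that may last minutes, hours, or even months.

- It must be deterministic: the same input and the same execution order must produce the same result.

- It must not perform I/O itself; every external operation must be delegated to an Activity.

- It can be paused, resumed, and replayed.

- All of its state and steps are recorded in the execution history (for traceability and replay).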

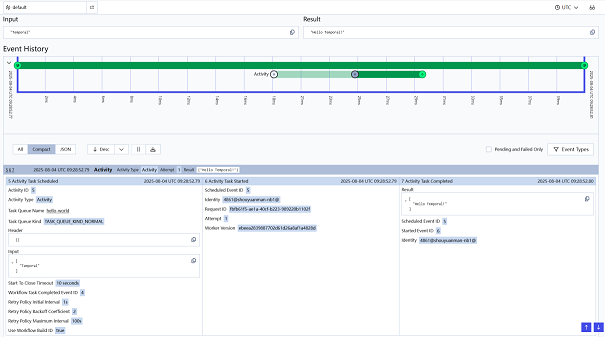

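Activity

What a Workflow orchestrates is mainly Activities. Orchestrating Activities is just like writing ordinary code: you can call Activity methods from if/for or even while(true) constructs, as long as the workflow code stays deterministic. An Activity is the actual task invoked from a workflow, such as sending an email, calling an HTTP API, or writing to a database.

- It may contain non-deterministic code, such as network requests and database operations.

- A failed run is retried automatically.

- Timeouts and retry policies are configurable; because of retries, Activities should be designed to be idempotent.

- Activities are executed by Temporal Workers, which can be deployed in a distributed, parallel fashion.

- A long-running Activity can report its progress through heartbeats, and that information is visible in the Temporal UI.

A workflow usually contains multiple Activity calls.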
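The automatic retry behavior can be pictured with a small, self-contained sketch. This is plain Python rather than the Temporal SDK: the `RetryPolicy` dataclass below is a hypothetical stand-in whose field names are loosely modeled on the usual retry-policy knobs (initial interval, backoff coefficient, maximum interval, maximum attempts), and `run_with_retry` is an illustrative helper, not a Temporal API.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple, TypeVar

T = TypeVar("T")


@dataclass
class RetryPolicy:
    """Hypothetical policy object; intervals are in seconds."""
    initial_interval: float = 1.0
    backoff_coefficient: float = 2.0
    maximum_interval: float = 10.0
    maximum_attempts: int = 5


def backoff_schedule(policy: RetryPolicy) -> List[float]:
    """Delays to wait before each retry (one fewer than maximum_attempts)."""
    delays: List[float] = []
    delay = policy.initial_interval
    for _ in range(policy.maximum_attempts - 1):
        delays.append(min(delay, policy.maximum_interval))
        delay *= policy.backoff_coefficient
    return delays


def run_with_retry(activity: Callable[[], T], policy: RetryPolicy,
                   sleep: Callable[[float], None] = lambda seconds: None) -> T:
    """Call activity until it succeeds or attempts run out; re-raise the last error."""
    delays = backoff_schedule(policy)
    last_error: Optional[Exception] = None
    for attempt in range(policy.maximum_attempts):
        try:
            return activity()
        except Exception as exc:
            last_error = exc
            if attempt < len(delays):
                sleep(delays[attempt])  # injected so the sketch does not really wait
    assert last_error is not None
    raise last_error


def demo_retry() -> Tuple[str, List[float]]:
    """An activity that fails twice with a transient error, then succeeds."""
    calls = {"n": 0}
    slept: List[float] = []

    def flaky() -> str:
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("transient")
        return "ok"

    return run_with_retry(flaky, RetryPolicy(), sleep=slept.append), slept
```

Because the same call may run more than once, the activity body has to tolerate repetition, which is why idempotency matters in practice.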

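Signal

A running WorkflowExecution can be sent signals that carry parameters. The Workflow can wait for signals or handle them conditionally, which lets its execution logic be controlled dynamically. A Signal is a mechanism that allows external events or systems to send data or notifications to a running workflow.

- A workflow can call Workflow.await() to wait for a Signal.

- This enables event-driven workflows.

- It is similar to "pushing a message into a running process".

- For example, in an approval process the frontend can pass parameters as a Signal, and the workflow continues only after it has received the Signal.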
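The approval example can be sketched with a Python generator standing in for the workflow. This is a toy model, not the Temporal SDK: `approval_workflow` and `run_until_signal` are hypothetical names, and the `yield` plays the role of the point where a real workflow would block waiting for a Signal.

```python
from typing import Dict, Generator, Tuple


def approval_workflow(request_id: str) -> Generator[str, Dict, str]:
    """Toy workflow: pauses until an approval signal is delivered."""
    decision = yield "waiting_for_approval"  # analogous to awaiting a Signal
    verdict = "approved" if decision.get("approved") else "rejected"
    return f"{request_id}: {verdict} by {decision['by']}"


def run_until_signal(request_id: str, signal: Dict) -> Tuple[str, str]:
    """Drive the workflow to its wait point, then deliver one signal."""
    workflow = approval_workflow(request_id)
    state = next(workflow)          # run up to the first wait point
    try:
        workflow.send(signal)       # deliver the signal; the workflow resumes
    except StopIteration as finished:
        return state, finished.value
    raise RuntimeError("workflow did not finish after the signal")
```

The workflow does nothing while it waits; it moves forward only when the outside world pushes data in, which is the event-driven behavior described in the list above.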

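Worker

A Worker is the program process that actually runs Workflow code or Activity code.

- There are two kinds of Worker: Workflow Workers and Activity Workers.

- Usually the same program registers both workflows and activities.

- A Worker pulls tasks from the Temporal Server, executes them, and reports the results.

- A Worker starts several thread pools, used for Workflow execution, Activity execution, and Pollers (which pull tasks).

- Multiple instances can be scaled out elastically to increase concurrent execution capacity.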

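Task Queue

Execution requests for workflows and activities are placed on a Task Queue, from which Workers pull tasks to execute.

- Each Worker process can listen on one or more task queues.

- Different Workflows and Activities can be bound to different Task Queues.

- Routing and load balancing are supported.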
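A rough in-memory model shows how named task queues route work to the Workers that poll them. Everything here is an illustrative assumption written in plain Python: `TaskQueues`, `Worker`, the queue names, and the handler functions are hypothetical and unrelated to the real SDK classes.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Optional, Tuple

Task = Tuple[str, tuple]  # (activity name, positional arguments)


class TaskQueues:
    """Toy stand-in for the server side: one FIFO queue per task-queue name."""

    def __init__(self) -> None:
        self._queues: Dict[str, Deque[Task]] = defaultdict(deque)

    def enqueue(self, queue: str, task: Task) -> None:
        self._queues[queue].append(task)

    def poll(self, queue: str) -> Optional[Task]:
        pending = self._queues[queue]
        return pending.popleft() if pending else None


class Worker:
    """Polls a single named queue and runs the matching registered handler."""

    def __init__(self, name: str, queue: str, handlers: Dict[str, Callable]) -> None:
        self.name, self.queue, self.handlers = name, queue, handlers

    def poll_once(self, queues: TaskQueues) -> Optional[str]:
        task = queues.poll(self.queue)
        if task is None:
            return None  # nothing to do on this queue right now
        activity, args = task
        return f"{self.name}:{activity}={self.handlers[activity](*args)}"


def demo_task_queues() -> List[Optional[str]]:
    queues = TaskQueues()
    queues.enqueue("email", ("send_email", ("bob",)))
    queues.enqueue("billing", ("charge", (30,)))
    email_worker = Worker("w-email", "email", {"send_email": lambda to: f"sent to {to}"})
    billing_worker = Worker("w-billing", "billing", {"charge": lambda amount: amount * 100})
    return [
        billing_worker.poll_once(queues),
        email_worker.poll_once(queues),
        email_worker.poll_once(queues),  # queue is now empty
    ]
```

Workers pull rather than being pushed to, so adding more Workers on the same queue name spreads the load without any change on the enqueueing side.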

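Temporal Server (core engine)

The Temporal backend service, which coordinates the whole system.

- It schedules workflows, persists history, and dispatches tasks to Workers.

- It is a highly available service cluster made up of the Frontend, History, Matching, and Worker sub-services.

- Data is stored in a database (MySQL, PostgreSQL, Cassandra, and so on).

- You only write business logic; the Server takes care of system-level complexity such as scheduling, replay, and persistence.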

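Query

A Query lets an external caller read the current state of a workflow without affecting its execution.

- It is a read-only operation.

- It is commonly used by a frontend UI to query progress and status.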

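Child Workflow

A workflow can start another workflow as a "child workflow".

- A child workflow is independent and has its own execution history.

- Multiple child workflows can run in parallel.

- The parent workflow can listen for events such as completion or failure of a child workflow.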

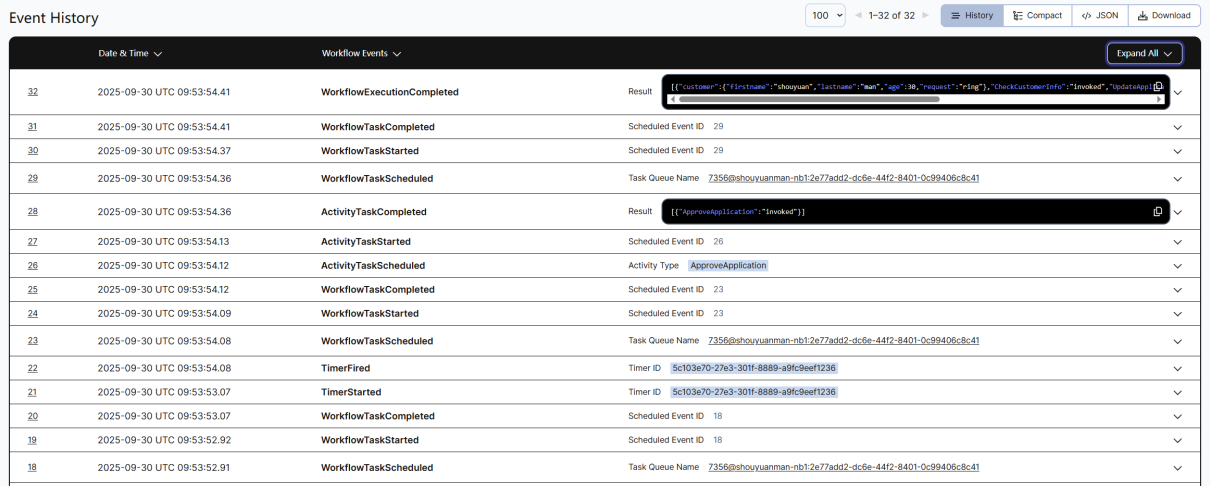

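Execution History

Temporal records every step of a workflow as an event, forming a complete execution log.

- It is used for crash-safe recovery, replay, and debugging.

- When a workflow is recovered, its earlier operations are "replayed" from the history to rebuild the current state.

- Developers do not need to manage it manually.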
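The idea of rebuilding state by replaying history can be sketched in a few lines of plain Python. This is a deliberately simplified model, not how the SDK is implemented: `ReplayContext`, `order_workflow`, and the two toy activities are hypothetical, and the "history" is just a list of recorded activity results.

```python
from typing import Any, Callable, List, Tuple


class ReplayContext:
    """Runs each activity once, records its result, and serves it back on replay."""

    def __init__(self, history: List[Any]) -> None:
        self.history = history            # persisted results, in call order
        self._cursor = 0
        self.executed: List[str] = []     # activities really run in this pass

    def execute_activity(self, name: str, fn: Callable[..., Any], *args: Any) -> Any:
        if self._cursor < len(self.history):
            result = self.history[self._cursor]   # replay: reuse the recorded result
        else:
            result = fn(*args)                    # first time: actually run it
            self.history.append(result)
            self.executed.append(name)
        self._cursor += 1
        return result


def order_workflow(ctx: ReplayContext, amount: int) -> str:
    """Deterministic workflow code: same inputs and history give the same path."""
    fee = ctx.execute_activity("quote_fee", lambda a: a // 10, amount)
    total = ctx.execute_activity("charge", lambda a, f: a + f, amount, fee)
    return f"charged {total}"


def demo_replay() -> Tuple[str, List[str], str, List[str]]:
    history: List[Any] = []
    first = ReplayContext(history)
    first_result = order_workflow(first, 200)
    # Simulate a worker restart: a fresh context over the same persisted history.
    second = ReplayContext(history)
    second_result = order_workflow(second, 200)
    return first_result, first.executed, second_result, second.executed
```

On the second pass no activity runs again; the workflow function simply re-executes and picks up recorded results. This only works if the workflow code makes the same calls in the same order every time, which is where the determinism requirement comes from.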

剖析——Temporal原理

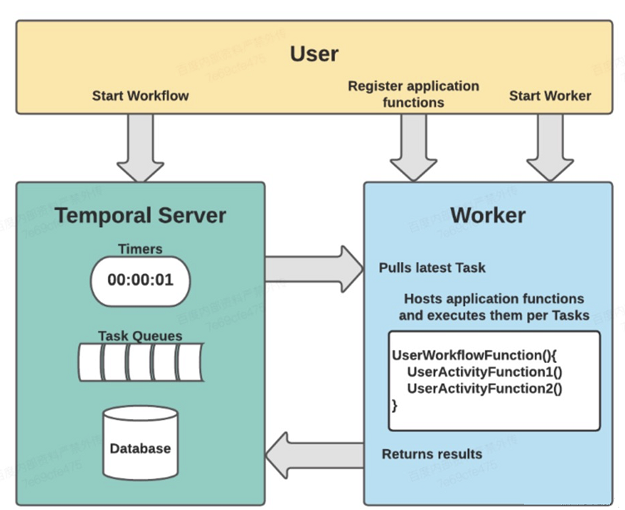

在业务模块中按规则编写Workflow流程以及其具体的Activity,并注册到worker中,启动worker,当外部用户触发Workflow,Temporal编排workflow形成⼀系列的task送到队列中,worker去队列取任务,执行后将结果返回给Temporal。

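The flow in detail:

- Start the Temporal Server.

- Start a Worker that listens to the Temporal Server and loops, fetching workflows to execute.

- A Starter creates a workflow, packages its arguments, and calls the SDK API to send it to the Temporal Server.

- The Worker pulls the workflow and begins processing its logic.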

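How are the Activities orchestrated in a Workflow executed?

1. The Workflow code calls an Activity

- Your Workflow code contains the call to the Activity (in Java, for example, through an ActivityStub).

- At this point the Workflow Worker does not execute the Activity code immediately; it packages the call as a task (an ActivityTask).

2. The Workflow Worker sends the Activity scheduling request to the Temporal Server

- The Workflow Worker makes an RPC call that sends the Activity execution request (method name, arguments, and so on) to the Temporal Server.

- The Temporal Server writes the Activity task to the event history and to the corresponding Task Queue.

3. The Activity Worker listens on the Task Queue and executes the Activity

- A Worker dedicated to running Activity code (the Activity Worker) listens on this Task Queue.

- When it receives the Activity task, it calls the corresponding Activity implementation and carries out the actual business operation.

4. The Activity result is returned to the Temporal Server

- When the Activity finishes, its result (success or failure) is returned to the Temporal Server over RPC.

- The Temporal Server updates the event history, recording the Activity's execution status and result.

5. The Temporal Server notifies the Workflow Worker to continue

- The Temporal Server notifies the Workflow Worker that the Activity has completed.

- The Workflow Worker continues replaying the Workflow code, picks up the Activity result, and moves on.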

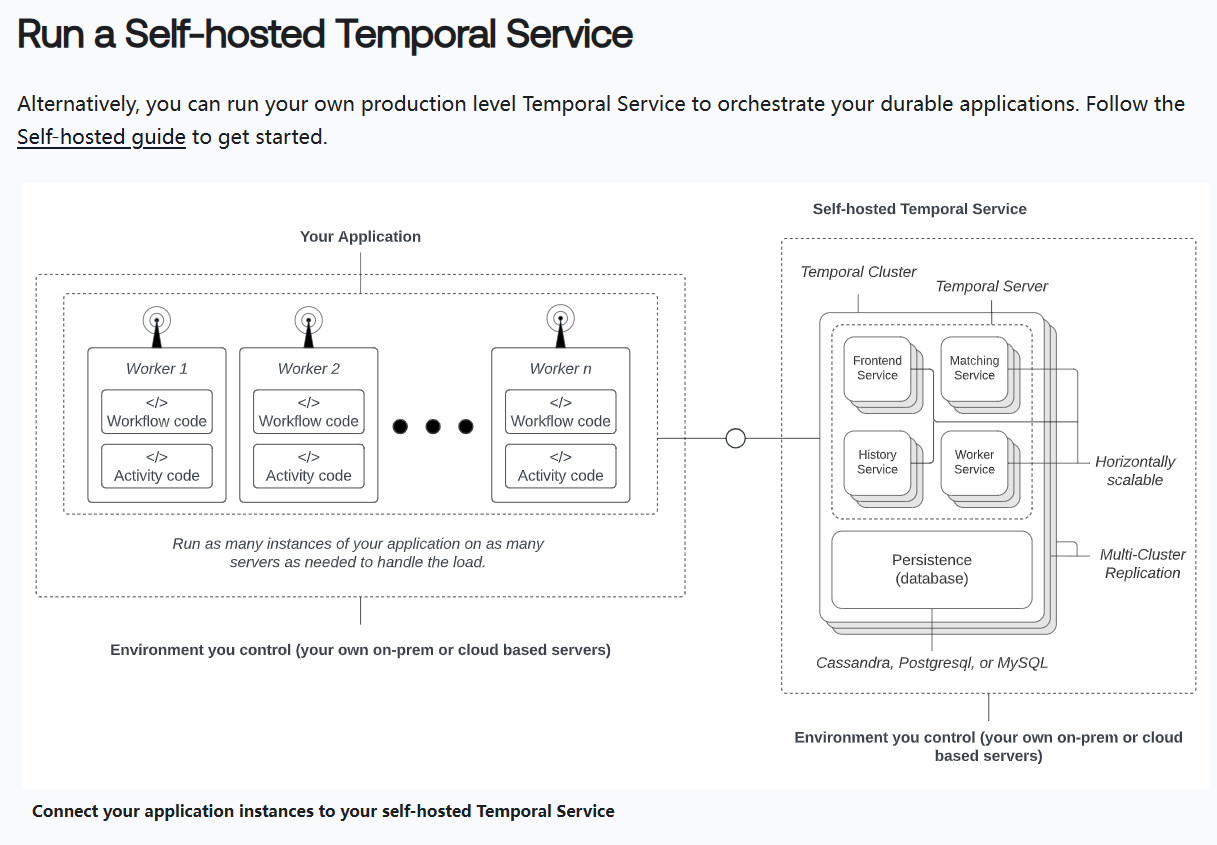

Temporal Deployment Architecture

Connect your application instances to your self-hosted Temporal Service (from the official documentation)

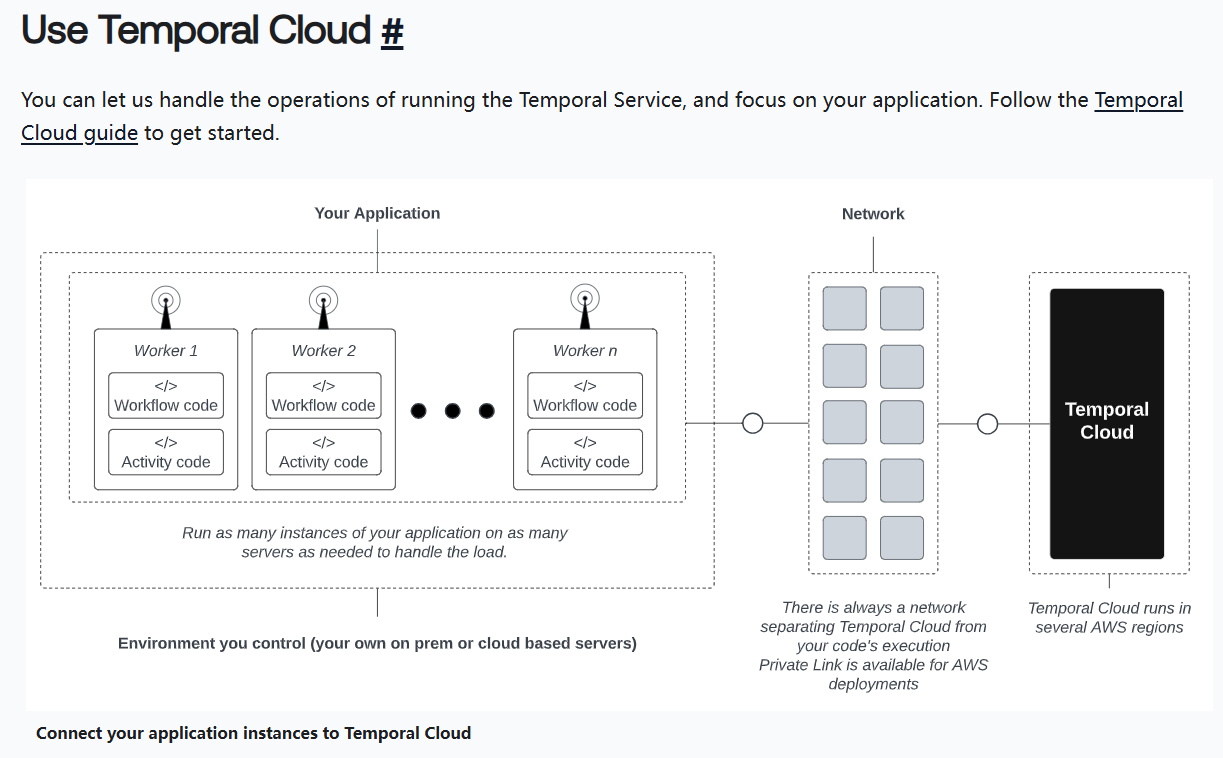

Connect your application instances to Temporal Cloud (from the official documentation)

For details, see the Temporal production deployment guide.

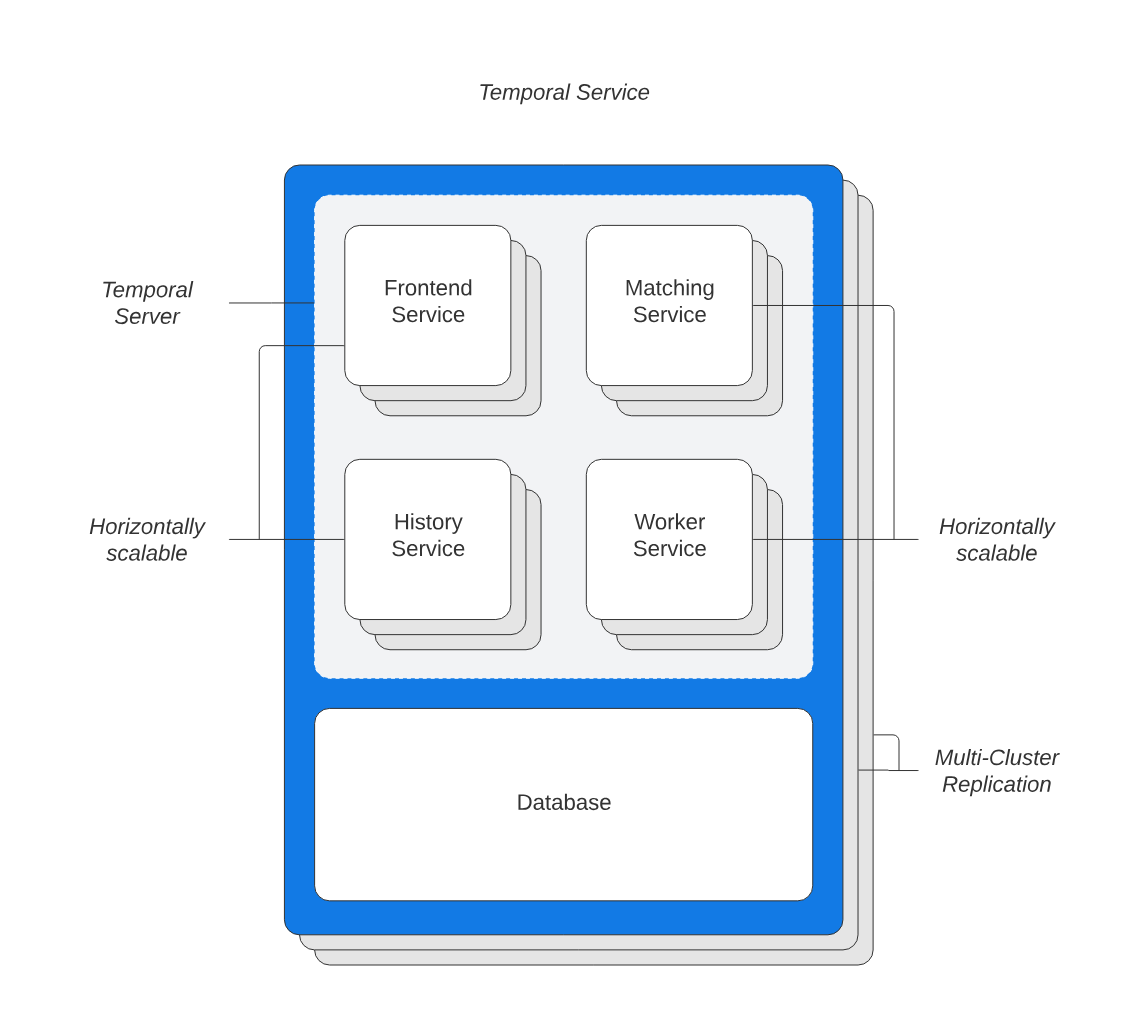

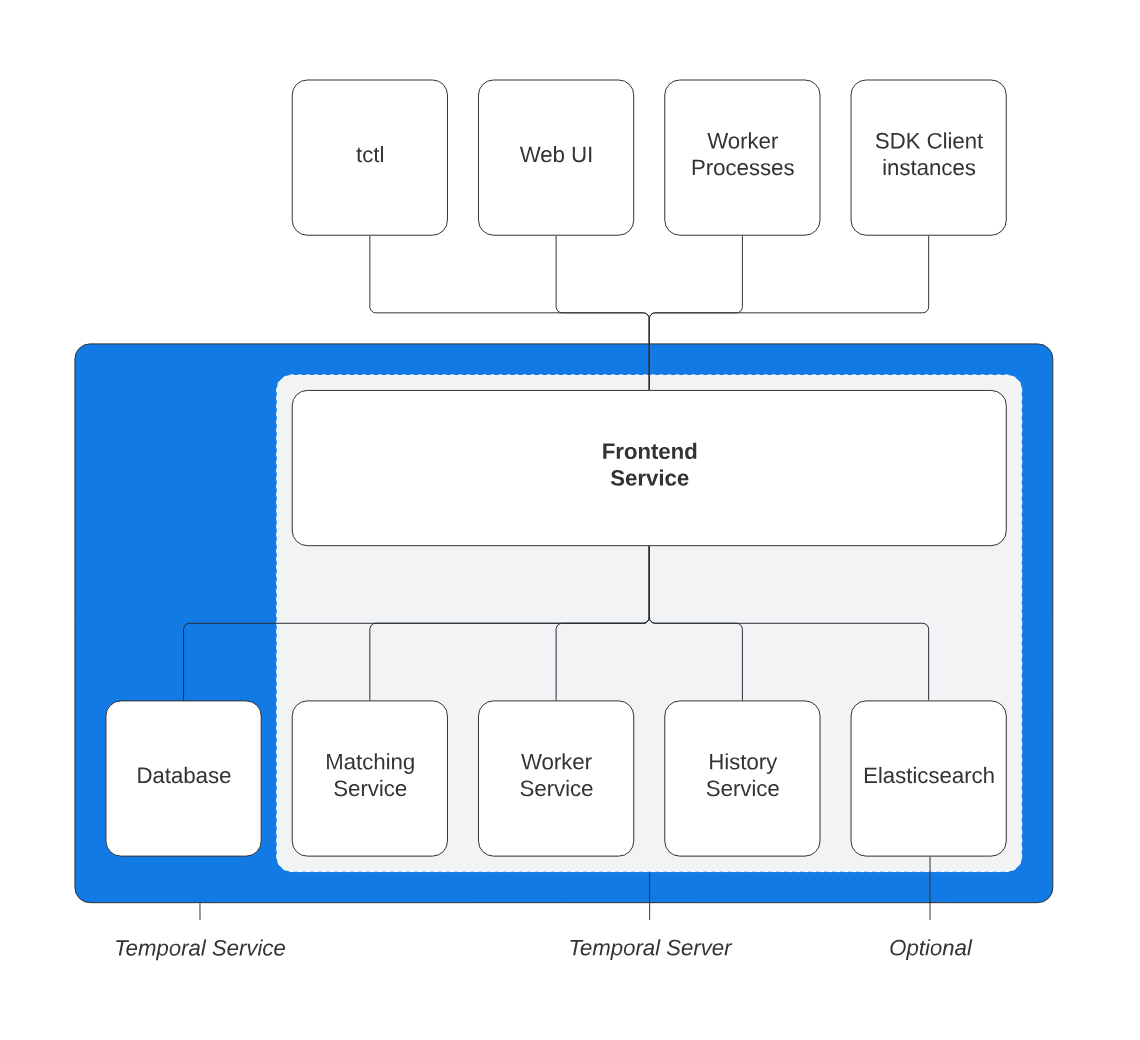

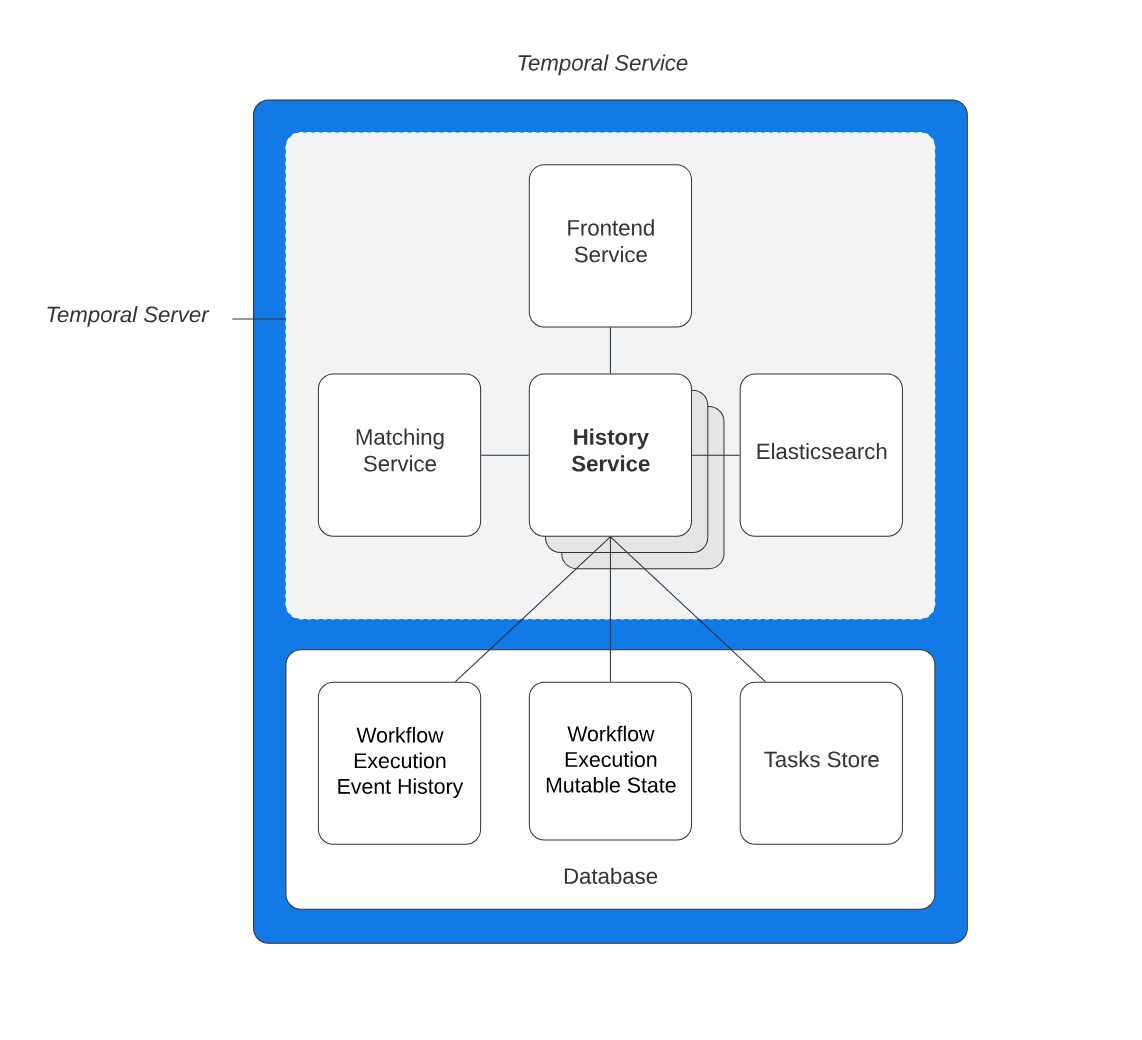

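Temporal Service core components, from a deployment perspective

Temporal Service

A Temporal cluster is durable and consists of four independently scalable services:

- Frontend gateway: rate limiting, routing, authorization

- History subsystem: maintains data (mutable state, queues, and timers)

- Matching subsystem: hosts Task Queues for dispatching

- Worker service: for internal background workflows

For example, in a real production deployment a cluster might have 5 Frontend, 15 History, 17 Matching, and 3 Worker services.

The Temporal services can run independently or be grouped into shared processes on one or more physical or virtual machines. For live (production) environments, running each service independently is recommended, because each service has different scaling requirements and troubleshooting becomes easier. The History, Matching, and Worker services can scale horizontally within a cluster. The Frontend service scales differently from the others because it has no sharding or partitioning; it is simply stateless.

Each service is aware of the others, including scaled-out instances, through Ringpop's membership protocol.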

Temporal Server

Frontend Service

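The Frontend Service is a stateless gateway service that exposes a strongly typed Proto API. It is responsible for rate limiting, authorization, validation, and routing of inbound requests.

Inbound requests include the following:

- Namespace CRUD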

- External events

- Worker polls

- Visibility requests

- Admin operations via the CLI

- Multi-cluster Replication related calls from a remote Cluster

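Every request related to a Workflow Execution must carry a Workflow Id, which is hashed for routing purposes. The Frontend Service has access to the hash rings that maintain service membership information, including how many nodes (instances of each service) are in the cluster. Rate limits on inbound requests are applied per host and per namespace.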
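Hash-based routing by Workflow Id can be illustrated with a short sketch. This is an assumption-laden toy in plain Python: the real server uses its own hash function and key layout, and SHA-256, the `namespace_workflow` key format, and the `shard_for` helper here are stand-ins chosen only because they are available in the standard library.

```python
import hashlib


def shard_for(namespace_id: str, workflow_id: str, num_shards: int) -> int:
    """Map a workflow to a shard number in 1..num_shards (illustrative hash only)."""
    key = f"{namespace_id}_{workflow_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % num_shards + 1


def shard_histogram(num_workflows: int, num_shards: int) -> dict:
    """Count how many hypothetical workflow ids land on each shard."""
    counts = {shard: 0 for shard in range(1, num_shards + 1)}
    for i in range(num_workflows):
        counts[shard_for("default", f"order-{i}", num_shards)] += 1
    return counts
```

The same Workflow Id always maps to the same shard for a given shard count, so every request for one execution reaches the same owner. Changing `num_shards` changes the mapping for most ids, which gives some intuition for why a shard count is typically chosen up front.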

The Frontend Service talks to the Matching Service, the History Service, the Worker Service, the database, and Elasticsearch (if in use).

It uses grpcPort 7233 to host the service handler.

It uses port 6933 for membership-related communication.

Temporal Frontend Service (from the official documentation)

History Service

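The History Service tracks the state of Workflow Executions.

The History Service scales horizontally through individual shards, which are configured when the cluster is created. The number of shards stays fixed for the lifetime of the cluster, so you should plan for growth and over-provision.

Each shard maintains data (such as routing IDs and mutable state) and queues. A History shard maintains three kinds of queues:

- Transfer queue: transfers internal tasks to the Matching Service. Whenever a new Workflow Task needs to be scheduled, the History Service dispatches it to the Matching Service transactionally.

- Timer queue: durably persists timers.

- Replicator queue: used only by the experimental Multi-Cluster feature.

The History Service talks to the Matching Service and the database.

It uses grpcPort 7234 to host the service handler.

It uses port 6934 for membership-related communication.

Temporal History Service (from the official documentation)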

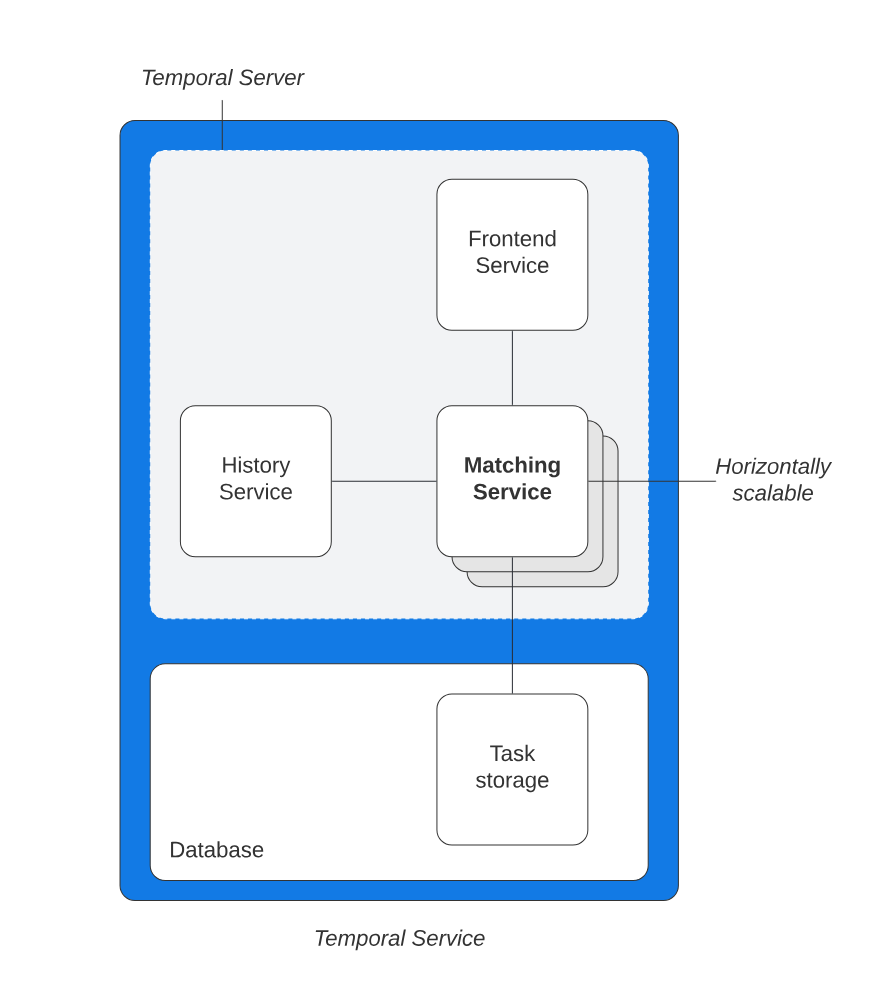

Matching Service

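The Matching Service hosts the Task Queues used for task dispatching. It matches Workers to Tasks and routes new tasks to the appropriate queue. The service can scale internally by running multiple instances.

It talks to the Frontend Service, the History Service, and the database.

It uses grpcPort 7235 to host the service handler.

It uses port 6935 for membership-related communication.

Temporal Matching Service (from the official documentation)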

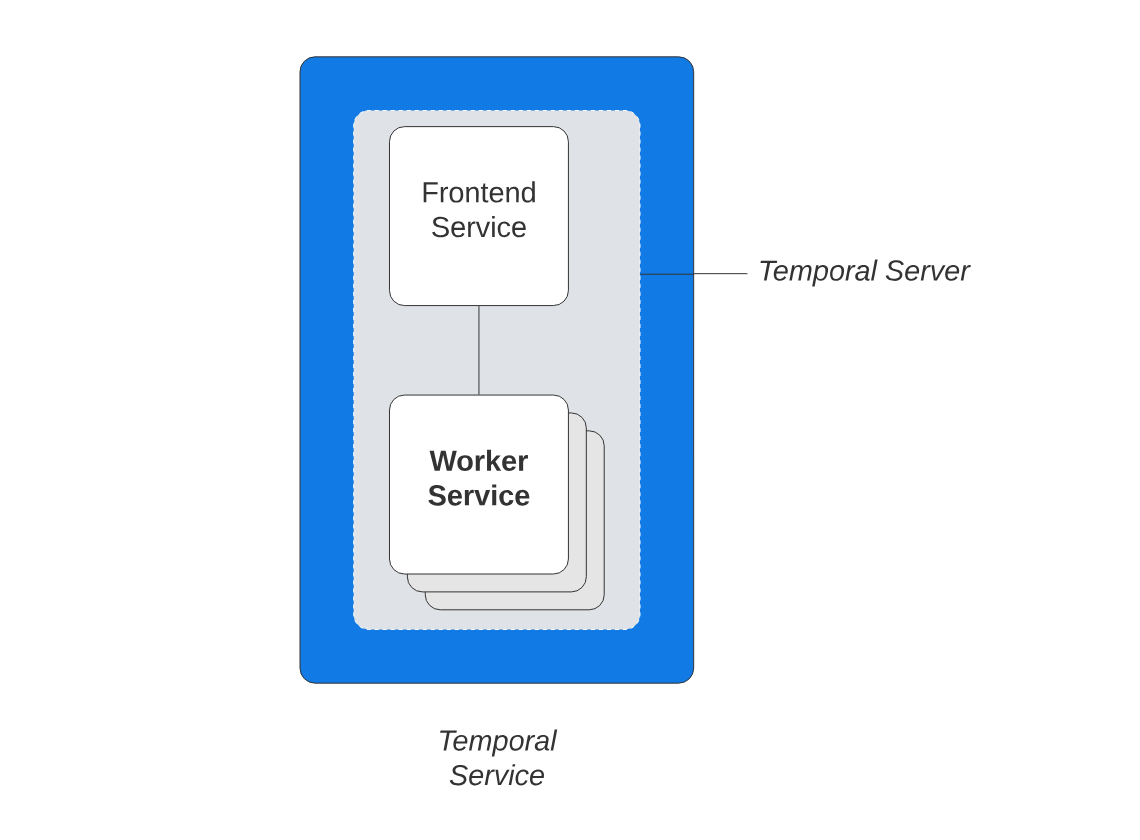

Worker Service

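The Worker Service runs background processing for the replication queue, system Workflows, and (in versions after 1.5.0) the Kafka visibility processor.

It talks to the Frontend Service.

It uses grpcPort 7239 to host the service handler.

It uses port 6939 for membership-related communication.

Temporal Worker Service (from the official documentation)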

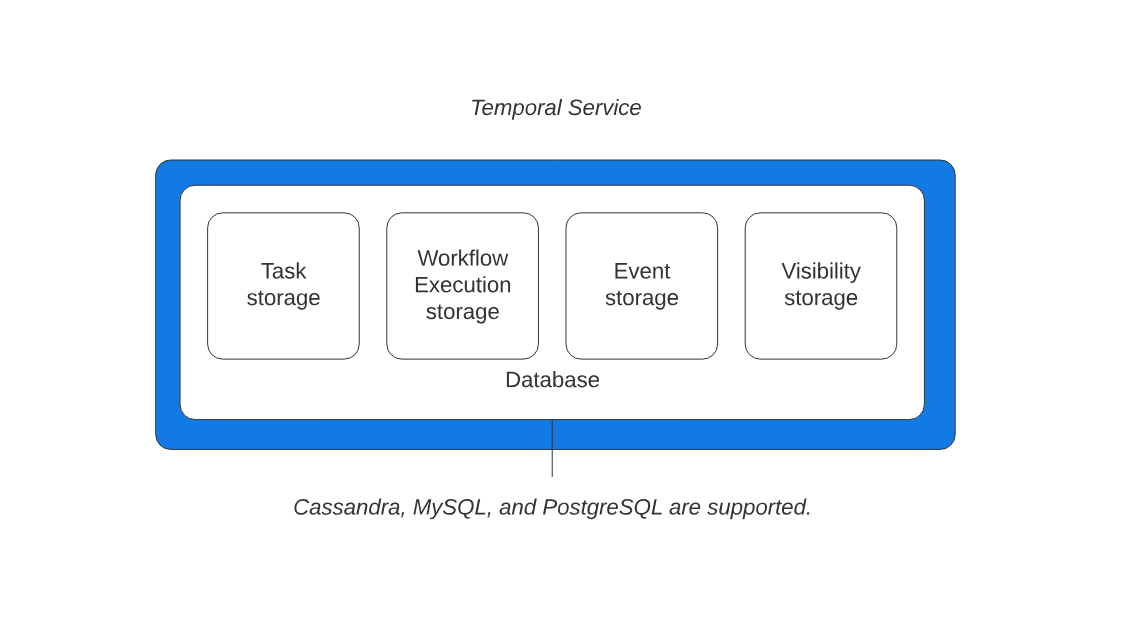

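Database (persistence)

The database provides storage for the system.

Cassandra, MySQL, and PostgreSQL schemas are supported, so any of them can serve as the Server's database.

The database stores the following kinds of data:

- Tasks: tasks waiting to be dispatched.

- State of Workflow Executions:

  - Execution table: a capture of the mutable state of Workflow Executions.

  - History table: an append-only log of Workflow Execution History Events.

- Namespace metadata: metadata of each Namespace in the cluster.

- Visibility data: enables operations such as "show all running Workflow Executions". For production environments, Elasticsearch is recommended.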

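Visibility

In the Temporal Platform, Visibility refers to the subsystems and APIs that let an operator view, filter, and search the Workflow Executions that currently exist in a Temporal Service. The Visibility store in a Temporal Service holds persisted Workflow Execution Event History data and is configured as part of the persistence store, so that Workflow Execution details on the Temporal Service can be listed and filtered.

With Temporal Server v1.21 you can set up Dual Visibility to migrate your Visibility store from one database to another. Starting with Temporal Server v1.21, support for separate standard and advanced Visibility setups is deprecated. Check the list of supported databases for updates.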

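Archival

What is Archival?

Archival automatically backs up Event Histories and Visibility records from Temporal Service persistence to a custom blob store.

Workflow Execution Event Histories are backed up after the Retention Period is reached. Visibility records are backed up immediately after a Workflow Execution reaches a Closed status.

Archival lets Workflow Execution data persist for as long as needed without overwhelming the Temporal Service's persistence store. It is useful for compliance and troubleshooting.

Temporal's Archival feature is considered experimental and is not subject to the normal versioning and support policy.

Archival is not supported when running Temporal through Docker. It is disabled by default when installing the system manually or deploying through Helm charts, and can be enabled in the configuration file.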

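Configuration

The Temporal Service configuration is the set of setup and configuration details for your self-hosted Temporal Service, defined in YAML. You must define it when deploying a self-hosted Temporal Service.

For details on using Temporal Cloud, see the Temporal Cloud documentation.

The Temporal Service configuration consists of two types of configuration: static and dynamic.

Secure your Temporal Service (self-hosted or Temporal Cloud) by encrypting network communication and setting up authentication and authorization protocols for API calls. For details on setting up Temporal Service security, see the Temporal Platform security features.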

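Observability

You can monitor and observe performance using the metrics emitted by your self-hosted Temporal Service or by Temporal Cloud.

By default, Temporal emits metrics in a format supported by Prometheus. Any metrics software that supports the same format can be used. The official documentation lists the following Prometheus and Grafana versions as tested:

- Prometheus >= v2.0

- Grafana >= v2.5

Temporal Cloud emits metrics through a Prometheus HTTP API endpoint, which can be used directly as a Prometheus data source in Grafana or queried to export Cloud metrics to any observability platform. For details on Cloud metrics and setup, see the Temporal Cloud documentation.

On a self-hosted Temporal Service, expose a Prometheus endpoint in the Temporal Service configuration and configure Prometheus to scrape metrics from that endpoint. You can then set up your observability platform (such as Grafana) with Prometheus as the data source. For details on self-hosted Temporal Service metrics and setup, see the official documentation.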

Multi-Cluster Replication

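Multi-Cluster Replication asynchronously replicates Workflow Executions from an active cluster to other passive clusters, for backup and state reconstruction. When necessary, and for higher availability, cluster operations can fail over to any of the backup clusters.

Temporal's Multi-Cluster Replication feature is considered experimental and is not subject to the normal versioning and support policy.

Temporal automatically forwards Start, Signal, and Query requests to the active cluster. This feature must be enabled through a dynamic config flag per global namespace. When it is enabled, Tasks are sent to the parent Task Queue partition that matches that namespace, if it exists.

All Visibility APIs can be used against both active and standby clusters, which lets the Temporal UI work seamlessly for global namespaces. Applications that call the Temporal Visibility API directly continue to work even when a global namespace is in standby mode. However, when querying Workflow Execution state from a standby cluster, you may see a lag caused by replication delay.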

实践——Temporal 部署

几种部署搭建方式

1.本地调试,使用Temporalite

本地调试一般没有性能和稳定性要求,可以下载运行All in one的Binary:Temporalite。

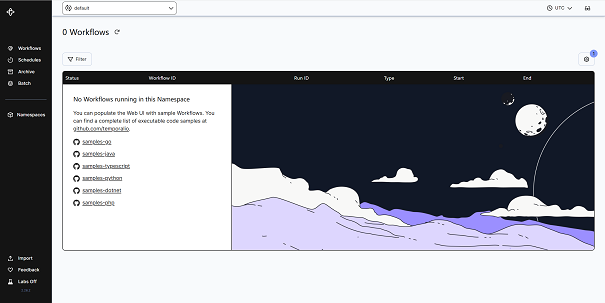

下载Binary添加到Path环境变量后,一行命令启动就能连localhost 7233端口使用Temporal服务,也可以打开浏览器进入Dashboard查看运行状态:http://127.0.0.1:8233。

1

$ temporalite start --namespace default

2. Helm deployment of distributed Temporal for development or production

For Dev/Prod environments, deploying with Helm on Kubernetes is recommended, with an independently operated MySQL or PostgreSQL as the storage layer. Create the databases in advance; once helm install completes, use admintools to initialize the database schema.

```shell
helm dependency build # optional

# Every continued line must end with a backslash
helm install -f values/values.mysql.yaml my-temporal . \
  --namespace temporal --create-namespace=true \
  --kube-context *** \
  --set elasticsearch.enabled=false \
  --set server.config.persistence.default.sql.user=*** \
  --set server.config.persistence.default.sql.password="***" \
  --set server.config.persistence.visibility.sql.user=*** \
  --set server.config.persistence.visibility.sql.password="***" \
  --set server.config.persistence.default.sql.host=*** \
  --set server.config.persistence.visibility.sql.host=***

# For a version update, run helm upgrade in the same way
```

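If you hit helm dependency problems during installation, you can comment out the dependency charts you do not use, such as Prometheus, Grafana, and Elasticsearch. Note: if you connect to an AWS Aurora database, add connectAttributes to values.mysql.yaml:

```yaml
server:
  config:
    persistence:
      default:
        sql:
          connectAttributes:
            tx_isolation: 'READ-COMMITTED'
```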

After the first install, the admintools Pod runs normally, while the other Pods fail because they cannot find the database tables. At that point, open a shell in the admintools Pod and run the following commands to set up the DB schema; see the schema source files for reference.

```shell
export SQL_PLUGIN=mysql
export SQL_HOST=mysql_host
export SQL_PORT=3306
export SQL_USER=mysql_user
export SQL_PASSWORD=mysql_password

# Main temporal database
cd /etc/temporal/schema/mysql/v57/temporal
temporal-sql-tool --connect-attributes "tx_isolation=READ-COMMITTED" --ep mysql-endpoint -u *** --password "***" --db temporal setup-schema -o -v 0.0 -f ./schema.sql

# Visibility database
cd /etc/temporal/schema/mysql/v57/visibility
temporal-sql-tool --connect-attributes "tx_isolation=READ-COMMITTED" --ep mysql-endpoint -u *** --password "***" --db temporal_visibility setup-schema -o -v 0.0 -f ./schema.sql
```

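Once the other Pods restart automatically or are deleted manually, all Temporal components run normally. You can forward port 8080 of temporal-web to check that the Temporal service is healthy.

3. Docker Compose deployment

Whether for local debugging or for Dev/Prod deployment, you can deploy with docker compose; for configuration, refer to temporalio/docker-compose. If the official images do not meet your needs, you can also use temporalio/docker-builds to build your own Docker images.

Storage layer

Take the default docker-compose-mysql.yml as an example. In this configuration the persistence layer uses only MySQL. Every time you run docker-compose up -d to start the containers, the MySQL container and the Temporal containers are started again, and when the Temporal Server container starts it re-initializes the MySQL schema and the corresponding metadata by default.

That data setup is only good enough for trying out Temporal's features. In real projects, to protect data integrity and security, the Temporal MySQL data is operated separately. This requires adapting the YAML: for example, removing the MySQL container configuration in favor of a separately operated MySQL; specifying the DB IP, port, user, password, and so on in the Temporal container configuration; and setting SKIP_SCHEMA_SETUP=true to skip the MySQL schema setup step. A modified example configuration follows: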

```yaml
version: "3.5"
services:
  # mysql:
  #   container_name: temporal-mysql-5.7
  #   environment:
  #     - MYSQL_ROOT_PASSWORD=root
  #   image: mysql:${MYSQL_VERSION}
  #   networks:
  #     - temporal-network
  #   ports:
  #     - 3306:3306
  #   volumes:
  #     - /var/lib/mysql
  temporal:
    container_name: temporal-v1.23.1
    # depends_on:
    #   - mysql
    environment:
      - DB=mysql
      - DB_PORT=3306
      - MYSQL_USER=root
      - MYSQL_PWD=123456
      # - MYSQL_SEEDS=mysql
      - MYSQL_SEEDS=172.31.75.79
      - DYNAMIC_CONFIG_FILE_PATH=config/dynamicconfig/development-sql.yaml
      - SKIP_SCHEMA_SETUP=true
      - LOG_LEVEL=debug,info
      # - TEMPORAL_CLI_SHOW_STACKS=1
    # image: temporalio/auto-setup:${TEMPORAL_VERSION}
    image: temporalio/auto-setup-my:${TEMPORAL_VERSION}
    networks:
      - temporal-network
    ports:
      - 7233:7233
    volumes:
      - ./dynamicconfig:/etc/temporal/config/dynamicconfig
    labels:
      kompose.volume.type: configMap
  temporal-admin-tools:
    container_name: temporal-admin-tools-v1.23.0
    depends_on:
      - temporal
    environment:
      - TEMPORAL_ADDRESS=temporal:7233
      - TEMPORAL_CLI_ADDRESS=temporal:7233
    image: temporalio/admin-tools:${TEMPORAL_ADMINTOOLS_VERSION}
    networks:
      - temporal-network
    stdin_open: true
    tty: true
  temporal-ui:
    container_name: temporal-ui-v2.17.1
    depends_on:
      - temporal
    environment:
      - TEMPORAL_ADDRESS=temporal:7233
      - TEMPORAL_CORS_ORIGINS=http://localhost:3000
    image: temporalio/ui:${TEMPORAL_UI_VERSION}
    networks:
      - temporal-network
    ports:
      - 8080:8080
networks:
  temporal-network:
    driver: bridge
    name: temporal-network
```

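Notes:

- For the connection information the Temporal Server needs in order to connect to MySQL, see SQL Configuration. Temporal MySQL Connection Config (from the official documentation)

- If your company's MySQL sits behind an Atlas proxy and the IP and port specified above belong to the proxy, operations on MySQL through the proxy report an error. Interestingly, a direct connection to MySQL does not; after investigation, the conclusion was that the Atlas proxy does not support Go prepared statements.

When operating the MySQL storage component independently, prepare the SQL that creates the databases and tables in advance. The full SQL follows: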


# temporal database

CREATE DATABASE temporal character set utf8;

CREATE TABLE `temporal`.`activity_info_maps` (

`shard_id` int(11) NOT NULL,

`namespace_id` binary(16) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`run_id` binary(16) NOT NULL,

`schedule_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) DEFAULT NULL,

PRIMARY KEY (`shard_id`,`namespace_id`,`workflow_id`,`run_id`,`schedule_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`buffered_events` (

`shard_id` int(11) NOT NULL,

`namespace_id` binary(16) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`run_id` binary(16) NOT NULL,

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`data` mediumblob NOT NULL,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`shard_id`,`namespace_id`,`workflow_id`,`run_id`,`id`),

UNIQUE KEY `id` (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`build_id_to_task_queue` (

`namespace_id` binary(16) NOT NULL,

`build_id` varchar(255) NOT NULL,

`task_queue_name` varchar(255) NOT NULL,

PRIMARY KEY (`namespace_id`,`build_id`,`task_queue_name`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`child_execution_info_maps` (

`shard_id` int(11) NOT NULL,

`namespace_id` binary(16) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`run_id` binary(16) NOT NULL,

`initiated_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) DEFAULT NULL,

PRIMARY KEY (`shard_id`,`namespace_id`,`workflow_id`,`run_id`,`initiated_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`cluster_membership` (

`membership_partition` int(11) NOT NULL,

`host_id` binary(16) NOT NULL,

`rpc_address` varchar(128) DEFAULT NULL,

`rpc_port` smallint(6) NOT NULL,

`role` tinyint(4) NOT NULL,

`session_start` timestamp NOT NULL DEFAULT '1970-01-02 00:00:01',

`last_heartbeat` timestamp NOT NULL DEFAULT '1970-01-02 00:00:01',

`record_expiry` timestamp NOT NULL DEFAULT '1970-01-02 00:00:01',

PRIMARY KEY (`membership_partition`,`host_id`),

KEY `role` (`role`,`host_id`),

KEY `role_2` (`role`,`last_heartbeat`),

KEY `rpc_address` (`rpc_address`,`role`),

KEY `last_heartbeat` (`last_heartbeat`),

KEY `record_expiry` (`record_expiry`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`cluster_metadata` (

`metadata_partition` int(11) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL DEFAULT 'Proto3',

`version` bigint(20) NOT NULL DEFAULT '1',

PRIMARY KEY (`metadata_partition`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`cluster_metadata_info` (

`metadata_partition` int(11) NOT NULL,

`cluster_name` varchar(255) NOT NULL,

`data` mediumblob NOT NULL,

`data_encoding` varchar(16) NOT NULL,

`version` bigint(20) NOT NULL,

PRIMARY KEY (`metadata_partition`,`cluster_name`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`current_executions` (

`shard_id` int(11) NOT NULL,

`namespace_id` binary(16) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`run_id` binary(16) NOT NULL,

`create_request_id` varchar(255) DEFAULT NULL,

`state` int(11) NOT NULL,

`status` int(11) NOT NULL,

`start_version` bigint(20) NOT NULL DEFAULT '0',

`last_write_version` bigint(20) NOT NULL,

PRIMARY KEY (`shard_id`,`namespace_id`,`workflow_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`executions` (

`shard_id` int(11) NOT NULL,

`namespace_id` binary(16) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`run_id` binary(16) NOT NULL,

`next_event_id` bigint(20) NOT NULL,

`last_write_version` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

`state` mediumblob,

`state_encoding` varchar(16) NOT NULL,

`db_record_version` bigint(20) NOT NULL DEFAULT '0',

PRIMARY KEY (`shard_id`,`namespace_id`,`workflow_id`,`run_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`history_immediate_tasks` (

`shard_id` int(11) NOT NULL,

`category_id` int(11) NOT NULL,

`task_id` bigint(20) NOT NULL,

`data` mediumblob NOT NULL,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`shard_id`,`category_id`,`task_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`history_node` (

`shard_id` int(11) NOT NULL,

`tree_id` binary(16) NOT NULL,

`branch_id` binary(16) NOT NULL,

`node_id` bigint(20) NOT NULL,

`txn_id` bigint(20) NOT NULL,

`data` mediumblob NOT NULL,

`data_encoding` varchar(16) NOT NULL,

`prev_txn_id` bigint(20) NOT NULL DEFAULT '0',

PRIMARY KEY (`shard_id`,`tree_id`,`branch_id`,`node_id`,`txn_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`history_scheduled_tasks` (

`shard_id` int(11) NOT NULL,

`category_id` int(11) NOT NULL,

`visibility_timestamp` datetime(6) NOT NULL,

`task_id` bigint(20) NOT NULL,

`data` mediumblob NOT NULL,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`shard_id`,`category_id`,`visibility_timestamp`,`task_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`history_tree` (

`shard_id` int(11) NOT NULL,

`tree_id` binary(16) NOT NULL,

`branch_id` binary(16) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`shard_id`,`tree_id`,`branch_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`namespace_metadata` (

`partition_id` int(11) NOT NULL,

`notification_version` bigint(20) NOT NULL,

PRIMARY KEY (`partition_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`namespaces` (

`partition_id` int(11) NOT NULL,

`id` binary(16) NOT NULL,

`name` varchar(255) NOT NULL,

`notification_version` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

`is_global` tinyint(1) NOT NULL,

PRIMARY KEY (`partition_id`,`id`),

UNIQUE KEY `name` (`name`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`queue` (

`queue_type` int(11) NOT NULL,

`message_id` bigint(20) NOT NULL,

`message_payload` mediumblob,

`message_encoding` varchar(16) NOT NULL DEFAULT 'Json',

PRIMARY KEY (`queue_type`,`message_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`queue_messages` (

`queue_type` int(11) NOT NULL,

`queue_name` varchar(255) NOT NULL,

`queue_partition` bigint(20) NOT NULL,

`message_id` bigint(20) NOT NULL,

`message_payload` mediumblob NOT NULL,

`message_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`queue_type`,`queue_name`,`queue_partition`,`message_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`queue_metadata` (

`queue_type` int(11) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL DEFAULT 'Json',

`version` bigint(20) NOT NULL DEFAULT '0',

PRIMARY KEY (`queue_type`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`queues` (

`queue_type` int(11) NOT NULL,

`queue_name` varchar(255) NOT NULL,

`metadata_payload` mediumblob NOT NULL,

`metadata_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`queue_type`,`queue_name`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`replication_tasks` (

`shard_id` int(11) NOT NULL,

`task_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`shard_id`,`task_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`replication_tasks_dlq` (

`source_cluster_name` varchar(255) NOT NULL,

`shard_id` int(11) NOT NULL,

`task_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`source_cluster_name`,`shard_id`,`task_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`request_cancel_info_maps` (

`shard_id` int(11) NOT NULL,

`namespace_id` binary(16) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`run_id` binary(16) NOT NULL,

`initiated_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) DEFAULT NULL,

PRIMARY KEY (`shard_id`,`namespace_id`,`workflow_id`,`run_id`,`initiated_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`schema_update_history` (

`version_partition` int(11) NOT NULL,

`year` int(11) NOT NULL,

`month` int(11) NOT NULL,

`update_time` datetime(6) NOT NULL,

`description` varchar(255) DEFAULT NULL,

`manifest_md5` varchar(64) DEFAULT NULL,

`new_version` varchar(64) DEFAULT NULL,

`old_version` varchar(64) DEFAULT NULL,

PRIMARY KEY (`version_partition`,`year`,`month`,`update_time`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`schema_version` (

`version_partition` int(11) NOT NULL,

`db_name` varchar(255) NOT NULL,

`creation_time` datetime(6) DEFAULT NULL,

`curr_version` varchar(64) DEFAULT NULL,

`min_compatible_version` varchar(64) DEFAULT NULL,

PRIMARY KEY (`version_partition`,`db_name`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

INSERT INTO `temporal`.`schema_version` (version_partition, db_name, creation_time, curr_version, min_compatible_version) VALUES (0, "temporal", CURRENT_TIMESTAMP, "1.11", "1.0");

CREATE TABLE `temporal`.`shards` (

`shard_id` int(11) NOT NULL,

`range_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`shard_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`signal_info_maps` (

`shard_id` int(11) NOT NULL,

`namespace_id` binary(16) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`run_id` binary(16) NOT NULL,

`initiated_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) DEFAULT NULL,

PRIMARY KEY (`shard_id`,`namespace_id`,`workflow_id`,`run_id`,`initiated_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`signals_requested_sets` (

`shard_id` int(11) NOT NULL,

`namespace_id` binary(16) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`run_id` binary(16) NOT NULL,

`signal_id` varchar(255) NOT NULL,

PRIMARY KEY (`shard_id`,`namespace_id`,`workflow_id`,`run_id`,`signal_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`task_queue_user_data` (

`namespace_id` binary(16) NOT NULL,

`task_queue_name` varchar(255) NOT NULL,

`data` mediumblob NOT NULL,

`data_encoding` varchar(16) NOT NULL,

`version` bigint(20) NOT NULL,

PRIMARY KEY (`namespace_id`,`task_queue_name`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`task_queues` (

`range_hash` int(10) unsigned NOT NULL,

`task_queue_id` varbinary(272) NOT NULL,

`range_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`range_hash`,`task_queue_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`tasks` (

`range_hash` int(10) unsigned NOT NULL,

`task_queue_id` varbinary(272) NOT NULL,

`task_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`range_hash`,`task_queue_id`,`task_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`timer_info_maps` (

`shard_id` int(11) NOT NULL,

`namespace_id` binary(16) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`run_id` binary(16) NOT NULL,

`timer_id` varchar(255) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) DEFAULT NULL,

PRIMARY KEY (`shard_id`,`namespace_id`,`workflow_id`,`run_id`,`timer_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`timer_tasks` (

`shard_id` int(11) NOT NULL,

`visibility_timestamp` datetime(6) NOT NULL,

`task_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`shard_id`,`visibility_timestamp`,`task_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`transfer_tasks` (

`shard_id` int(11) NOT NULL,

`task_id` bigint(20) NOT NULL,

`data` mediumblob,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`shard_id`,`task_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal`.`visibility_tasks` (

`shard_id` int(11) NOT NULL,

`task_id` bigint(20) NOT NULL,

`data` mediumblob NOT NULL,

`data_encoding` varchar(16) NOT NULL,

PRIMARY KEY (`shard_id`,`task_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

INSERT INTO `temporal`.`schema_update_history` (version_partition, year, month, update_time, description, manifest_md5, new_version, old_version) VALUES

(0, 2025, 9, CURRENT_TIMESTAMP, "initial version", "", "0.0", "0"),

(0, 2025, 9, CURRENT_TIMESTAMP+1, "base version of schema", "55b84ca114ac34d84bdc5f52c198fa33", "1.0", "0.0"),

(0, 2025, 9, CURRENT_TIMESTAMP+2, "schema update for cluster metadata", "58f06841bbb187cb210db32a090c21ee", "1.1", "1.0"),

(0, 2025, 9, CURRENT_TIMESTAMP+3, "schema update for RPC replication and blob size adjustments", "d0980c1ffb9ffa6e3ab6f84e285ffa9d", "1.2", "1.1"),

(0, 2025, 9, CURRENT_TIMESTAMP+4, "schema update for kafka deprecation", "3beee7d470421674194475f94b58d89b", "1.3", "1.2"),

(0, 2025, 9, CURRENT_TIMESTAMP+5, "schema update for cluster metadata cleanup", "c53e2e9cea5660c8a1f3b2ac73cdb138", "1.4", "1.3"),

(0, 2025, 9, CURRENT_TIMESTAMP+6, "schema update for cluster_membership, executions and history_node tables", "bfb307ba10ac0fdec83e0065dc5ffee4", "1.5", "1.4"),

(0, 2025, 9, CURRENT_TIMESTAMP+7, "schema update for queue_metadata", "978e1a6500d377ba91c6e37e5275a59b", "1.6", "1.5"),

(0, 2025, 9, CURRENT_TIMESTAMP+8, "create cluster metadata info table to store cluster information and executions to store tiered storage queue", "366b8b49d6701a6a09778e51ad1682ed", "1.7", "1.6"),

(0, 2025, 9, CURRENT_TIMESTAMP+9, "drop unused tasks table; expand VARCHAR columns governed by maxIDLength to VARCHAR(255)", "bc0761b792a339f7e1e29e00c4fd3e64", "1.8", "1.7"),

(0, 2025, 9, CURRENT_TIMESTAMP+10, "add history tasks table", "b62e4e5826967e152e00b75da42d12ea", "1.9", "1.8"),

(0, 2025, 9, CURRENT_TIMESTAMP+11, "add storage for update records and create task_queue_user_data table", "2b0c361b0d4ab7cf09ead5566f0db520", "1.10", "1.9"),

(0, 2025, 9, CURRENT_TIMESTAMP+12, "add queues and queue_messages tables", "94a91a900aa29ec3d81eb361ab7770be", "1.11", "1.10");

INSERT INTO `temporal`.`namespace_metadata` (partition_id, notification_version) VALUES (54321, 1);


# temporal_visibility database

CREATE DATABASE temporal_visibility character set utf8;

CREATE TABLE `temporal_visibility`.`executions_visibility` (

`namespace_id` char(64) NOT NULL,

`run_id` char(64) NOT NULL,

`start_time` datetime(6) NOT NULL,

`execution_time` datetime(6) NOT NULL,

`workflow_id` varchar(255) NOT NULL,

`workflow_type_name` varchar(255) NOT NULL,

`status` int(11) NOT NULL,

`close_time` datetime(6) DEFAULT NULL,

`history_length` bigint(20) DEFAULT NULL,

`memo` blob,

`encoding` varchar(64) NOT NULL,

`task_queue` varchar(255) NOT NULL DEFAULT '',

PRIMARY KEY (`namespace_id`,`run_id`),

KEY `by_type_start_time` (`namespace_id`,`workflow_type_name`,`status`,`start_time`,`run_id`),

KEY `by_workflow_id_start_time` (`namespace_id`,`workflow_id`,`status`,`start_time`,`run_id`),

KEY `by_status_by_start_time` (`namespace_id`,`status`,`start_time`,`run_id`),

KEY `by_type_close_time` (`namespace_id`,`workflow_type_name`,`status`,`close_time`,`run_id`),

KEY `by_workflow_id_close_time` (`namespace_id`,`workflow_id`,`status`,`close_time`,`run_id`),

KEY `by_status_by_close_time` (`namespace_id`,`status`,`close_time`,`run_id`),

KEY `by_close_time_by_status` (`namespace_id`,`close_time`,`run_id`,`status`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal_visibility`.`schema_update_history` (

`version_partition` int(11) NOT NULL,

`year` int(11) NOT NULL,

`month` int(11) NOT NULL,

`update_time` datetime(6) NOT NULL,

`description` varchar(255) DEFAULT NULL,

`manifest_md5` varchar(64) DEFAULT NULL,

`new_version` varchar(64) DEFAULT NULL,

`old_version` varchar(64) DEFAULT NULL,

PRIMARY KEY (`version_partition`,`year`,`month`,`update_time`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `temporal_visibility`.`schema_version` (

`version_partition` int(11) NOT NULL,

`db_name` varchar(255) NOT NULL,

`creation_time` datetime(6) DEFAULT NULL,

`curr_version` varchar(64) DEFAULT NULL,

`min_compatible_version` varchar(64) DEFAULT NULL,

PRIMARY KEY (`version_partition`,`db_name`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

INSERT INTO `temporal_visibility`.`schema_version` (version_partition, db_name, creation_time, curr_version, min_compatible_version) VALUES (0, "temporal_visibility", CURRENT_TIMESTAMP, "1.1", "1.0");

INSERT INTO `temporal_visibility`.`schema_update_history` (version_partition, year, month, update_time, description, manifest_md5, new_version, old_version) VALUES

(0, 2025, 9, CURRENT_TIMESTAMP, "initial version", "", "0.0", "0"),

(0, 2025, 9, CURRENT_TIMESTAMP+1, "base version of visibility schema", "698373883c1c0dd44607a446a62f2a79", "1.0", "0.0"),

(0, 2025, 9, CURRENT_TIMESTAMP+2, "add close time & status index", "e286f8af0a62e291b35189ce29d3fff3", "1.1", "1.0");

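Component Selection

If your company's MySQL standard supports only MySQL 5.7 and not MySQL 8, you need to pick, for each component, the latest tag that still works with MySQL 5.7, as follows: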

| 组件 | tag | remark |

|---|---|---|

| temporal auto-setup | 1.23.1 | Temporal 1.24.0(1.23.1的下一版本)开始,temporal源码mysql schema已经没有5.7的版本了temporalio/temporal temporal v1.23.1 temporal/proto/api docker-build release/v1.23.x |

| temporal-admin-tools | 1.23.0 | admin-tools 1.23.1不存在,选择1.23.0 |

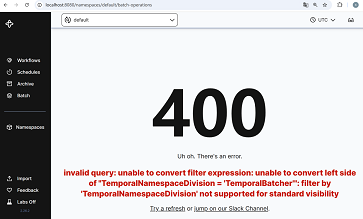

| temporal-ui | 2.17.1 | UI从2.19.0(2.17.1的下一版本)开始,增加BatchOperation功能页,这个功能页只支持MySQL8,不支持MySQL 5.7;官方和 Temporal 1.23.1适配的UI Version为2.26.2,如果可以容忍BatchOperation功能页存在,但报错400,则可以使用官方适配的UI版本。temporalio/ui temporalio/ui-server |
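As a sketch, the selection above could be pinned in the `.env` file that the docker-compose setup uses for image tags. The variable names follow those listed in the appendix. Whether your compose file reads exactly these names is an assumption to verify against your own checkout.

```shell
# .env (sketch): image tags for a MySQL 5.7 deployment, values taken from the table above.
# Comments are kept on their own lines so that no parser folds them into a value.
MYSQL_VERSION=5.7
TEMPORAL_VERSION=1.23.1
# admin-tools 1.23.1 does not exist, so 1.23.0 is used
TEMPORAL_ADMINTOOLS_VERSION=1.23.0
# last UI tag before the BatchOperation page was added
TEMPORAL_UI_VERSION=2.17.1
```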

Temporal GitHub

| topic | remark | commit_id |
|---|---|---|
| Bump Server version to 1.23.0 | Temporal 1.23.0 supports MySQL 5.7 | 668a6379f097903082d55286a957fd3e4c01c3d5 |
| Bump Server version to 1.23.1 | Temporal 1.23.1 supports MySQL 5.7 | fad6bdc0e9c0911f28829f3c47285357554e2567 |
| Bump Server version to 1.24.0 | Temporal 1.24.0 does not support MySQL 5.7 | 04b6c5e9fcd8ab9d1505c0ccfce802817d0d6e5e |

MySQL 5.7 Schema

| topic | link |
|---|---|
| MySQL 5.7 Schema Temporal 1.23.0 | Temporal 1.23.0 MySQL 5.7 Schema |
| MySQL 5.7 Schema Temporal 1.23.1 | Temporal 1.23.1 MySQL 5.7 Schema |

Docker Compose GitHub

| topic | remark | commit_id |
|---|---|---|
| Update MySQL and PosgreSQL to the latest (#55) | Upgrades MySQL (5.7 -> 8) and Postgres (9.6 -> 13). Temporal versions at this commit: temporalio/auto-setup:1.13.1, temporalio/admin-tools:1.13.1, temporalio/web:1.13.0 | 79c581ff1fea7afd1938cc9fb3b33ccf434c1108 |
| Elasticsearch should use version 7.16.2 or newer (#57) | Upgrades Elasticsearch | ad1de17c82e20b833fa48d415506025df1c70f85 |
| moving to .env file to manage tags (#73) | Image tags are managed centrally in .env | 8700c7b0ba0dc8c6491672b39efd5f15b3814484 |
| Update configs removing standard visibility support (#205) | From this commit onward (inclusive), docker-compose no longer supports MySQL 5.7. Temporal versions at this commit: temporalio/auto-setup: 1.23.0, temporal-admin-tools: 1.23.0, temporalio/ui: 2.26.2. The Temporal 1.23.0 schema still includes a MySQL 5.7 version. | 1fff9dd2ffc584ae4be1e8531a83a5905ea12306 |
| Bump Server version to 1.23.1 | From this commit onward, docker-compose contains only MySQL 8, but the Temporal schema still includes a MySQL 5.7 version. Temporal versions at this commit: temporalio/auto-setup: 1.23.1, temporal-admin-tools: 1.23.1, temporalio/ui: 2.26.2 | 54934438b8f0f020c9682efd3f2731025ad10dce |
| Bump Server version to 1.24.0 | Temporal versions at this commit: temporalio/auto-setup: 1.24.0, temporal-admin-tools: 1.24.0-tctl-1.18.1-cli-0.12.0, temporalio/ui: 2.26.2. The Temporal 1.24.0 schema no longer includes a MySQL 5.7 version. | 1f95f487f846c2565f984645b79f3b2c5b469e07 52f74c55f33ba4354bb7c0dbe5691e525ce463cc |

docker-build

For example, the Atlas proxy mentioned above does not support Go's prepare (prepared statements), so Temporal's MySQL driver layer has to be customized to work with it. That in turn means rebuilding the image for the temporal auto-setup module.

| docker-build | docker-build github |
| docker-build v1.23.x | The docker-build repository branch corresponding to v1.23.x |
| CLI | Use the CLI to run a Temporal Server and interact with it. |
| tctl | The Temporal CLI is a command-line tool you can use to perform various tasks on a Temporal Server. |

Appendix

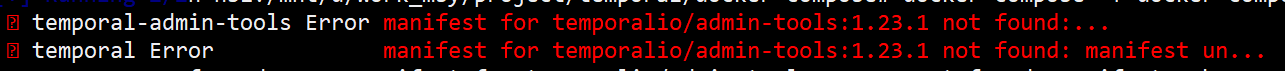

A. Temporal 1.23.1 with MySQL 5.7: problems encountered

| MYSQL_VERSION | 5.7 |
| TEMPORAL_VERSION | 1.23.1 |
| TEMPORAL_ADMINTOOLS_VERSION | |
| TEMPORAL_UI_VERSION | 2.26.2 |

After changing TEMPORAL_ADMINTOOLS_VERSION to 1.23.0, the stack starts normally, but the BatchOperation page is unavailable.

| TEMPORAL_ADMINTOOLS_VERSION | 1.23.0 |

After changing MYSQL_VERSION to 8, BatchOperation works without problems.

| MYSQL_VERSION | 8 |

B. Temporal 1.23.0 with MySQL 5.7: problems encountered

| MYSQL_VERSION | 5.7 |
| TEMPORAL_VERSION | 1.23.0 |
| TEMPORAL_UI_VERSION | 2.26.2 |

The BatchOperation page is unavailable.

C. Temporal 1.22.4 with MySQL 5.7: problems encountered

| MYSQL_VERSION | 5.7 |
| TEMPORAL_VERSION | 1.22.4 |
| TEMPORAL_UI_VERSION | 2.21.0 |

The BatchOperation page is unavailable.

D. Temporal 1.22.1 with MySQL 5.7: problems encountered

| MYSQL_VERSION | 5.7 |
| TEMPORAL_VERSION | 1.22.1 |
| TEMPORAL_UI_VERSION | 2.19.0 |

The BatchOperation page is unavailable.

E. Temporal 1.22.0 and UI 2.17.1 with MySQL 5.7

| MYSQL_VERSION | 5.7 |
| TEMPORAL_VERSION | 1.22.0 |
| TEMPORAL_UI_VERSION | 2.17.1 |

F. Temporal 1.21.0 with MySQL 5.7

| MYSQL_VERSION | 5.7 |
| TEMPORAL_VERSION | 1.21.0 |
| TEMPORAL_UI_VERSION | 2.16.1 |
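The findings in sections A through F, together with the version tables earlier, suggest two cutoffs for MySQL 5.7: server releases before 1.24.0 still ship a 5.7 schema, and UI releases before 2.19.0 have no BatchOperation page. The following is a minimal sketch that encodes just those two cutoffs. The function names `version_lt` and `check_combo` are hypothetical, and the comparison assumes a `sort` that supports `-V` (GNU coreutils).

```shell
#!/usr/bin/env bash
# Sketch: encode the two compatibility cutoffs observed in this article.

# version_lt A B: succeeds when version A sorts strictly before version B.
# Relies on `sort -V` (version sort), assumed to be available.
version_lt() {
  [ "$1" != "$2" ] && [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# check_combo MYSQL_VERSION TEMPORAL_VERSION TEMPORAL_UI_VERSION
# Prints one finding per component for the given combination.
check_combo() {
  local mysql="$1" server="$2" ui="$3"
  if [ "$mysql" != "5.7" ]; then
    echo "mysql $mysql: the MySQL 5.7 cutoffs do not apply"
    return 0
  fi
  if version_lt "$server" "1.24.0"; then
    echo "server $server: MySQL 5.7 schema available"
  else
    echo "server $server: no MySQL 5.7 schema"
  fi
  if version_lt "$ui" "2.19.0"; then
    echo "ui $ui: no BatchOperation page"
  else
    echo "ui $ui: BatchOperation page present but unusable on MySQL 5.7"
  fi
}

check_combo 5.7 1.23.1 2.17.1
check_combo 5.7 1.23.1 2.26.2
```

The first call corresponds to the selection recommended in this article, the second to the configuration in section A.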

Deployment and Running

1. Verify the MySQL schema

mysql> show databases;

+---------------------+

| Database |

+---------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| temporal |

| temporal_visibility |

+---------------------+

10 rows in set (0.01 sec)

mysql> use temporal;

mysql> show tables;

+---------------------------+

| Tables_in_temporal |

+---------------------------+

| activity_info_maps |

| buffered_events |

| build_id_to_task_queue |

| child_execution_info_maps |

| cluster_membership |

| cluster_metadata |

| cluster_metadata_info |

| current_executions |

| executions |

| history_immediate_tasks |

| history_node |

| history_scheduled_tasks |

| history_tree |

| namespace_metadata |

| namespaces |

| queue |

| queue_messages |

| queue_metadata |

| queues |

| replication_tasks |

| replication_tasks_dlq |

| request_cancel_info_maps |

| schema_update_history |

| schema_version |

| shards |

| signal_info_maps |

| signals_requested_sets |

| task_queue_user_data |

| task_queues |

| tasks |

| timer_info_maps |

| timer_tasks |

| transfer_tasks |

| visibility_tasks |

+---------------------------+

34 rows in set (0.00 sec)

mysql> use temporal_visibility;

mysql> show tables;

+-------------------------------+

| Tables_in_temporal_visibility |

+-------------------------------+

| executions_visibility |

| schema_update_history |

| schema_version |

+-------------------------------+

3 rows in set (0.00 sec)
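The manual check above can also be scripted. The sketch below reads a table listing, one name per line, and prints any expected table that is absent. The function name `missing_tables` is hypothetical, and the listing here is hard-coded from the `temporal_visibility` output above rather than fetched from a live server.

```shell
#!/usr/bin/env bash
# Sketch: report which expected tables are absent from a table listing.
# Reads one table name per line on stdin; expected names are the arguments.
missing_tables() {
  local listing expected
  listing="$(cat)"
  for expected in "$@"; do
    # -x matches the whole line, -F treats the name as a fixed string
    if ! printf '%s\n' "$listing" | grep -xF -- "$expected" >/dev/null; then
      echo "$expected"
    fi
  done
}

# Example: the three temporal_visibility tables, plus one deliberately absent name.
printf 'executions_visibility\nschema_update_history\nschema_version\n' |
  missing_tables executions_visibility schema_version not_a_real_table
```

Against a live server, the listing could come from something like `mysql -N -B -e 'SHOW TABLES' temporal_visibility`, with connection options added for your environment.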

2. Start the containers with docker-compose. Log output like the following indicates a successful start.
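Rather than reading the whole log by eye, the success check could be scripted. The sketch below treats the "Frontend is now healthy" message, which appears in the server log shown in this step, as the success marker. Treating any line with `"level":"error"` or `"level":"fatal"` as a failure is an assumption, and the function name `startup_ok` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: decide from server log text on stdin whether startup looks healthy.
# Succeeds only when the "Frontend is now healthy" message is present and
# no line carries "level":"error" or "level":"fatal" (assumed failure markers).
startup_ok() {
  local log
  log="$(cat)"
  printf '%s\n' "$log" | grep -F '"msg":"Frontend is now healthy"' >/dev/null || return 1
  if printf '%s\n' "$log" | grep -E '"level":"(error|fatal)"' >/dev/null; then
    return 1
  fi
  return 0
}

# Example with a single abbreviated log line.
printf '%s\n' \
  '{"level":"info","ts":"2025-09-30T09:29:24.256Z","msg":"Frontend is now healthy","service":"frontend"}' |
  startup_ok && echo "startup looks healthy"
```

With the containers running, the same function could be fed from `docker logs temporal-v1.23.1 2>&1 | startup_ok`.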

# docker-compose -f docker-compose-mysql.yml up -d

[+] Running 4/4

✔ Network temporal-network Created 0.0s

✔ Container temporal-v1.23.1 Started 0.3s

✔ Container temporal-admin-tools-v1.23.0 Started 0.4s

✔ Container temporal-ui-v2.17.1 Started 0.5s

# docker-compose ps

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

temporal-admin-tools-v1.23.0 temporalio/admin-tools:1.23.0 "tini -- sleep infin…" temporal-admin-tools 3 seconds ago Up 2 seconds

temporal-ui-v2.17.1 temporalio/ui:2.26.2 "./start-ui-server.sh" temporal-ui 3 seconds ago Up 2 seconds 0.0.0.0:8080->8080/tcp

temporal-v1.23.1 temporalio/auto-setup-my:1.23.1 "/etc/temporal/entry…" temporal 3 seconds ago Up 3 seconds 6933-6935/tcp, 6939/tcp, 7234-7235/tcp, 7239/tcp, 0.0.0.0:7233->7233/tcp

# docker logs temporal-v1.23.1

2025/09/30 09:29:24 Loading config; env=docker,zone=,configDir=config

2025/09/30 09:29:24 Loading config files=[config/docker.yaml]

TEMPORAL_ADDRESS is not set, setting it to 172.19.0.2:7233

Temporal CLI address: 172.19.0.2:7233.

{"level":"info","ts":"2025-09-30T09:29:24.092Z","msg":"Build info.","git-time":"2024-04-30T17:37:12.000Z","git-revision":"e7a73a0315dafc161dca23e9df78f8355e1e5ae7","git-modified":true,"go-arch":"amd64","go-os":"linux","go-version":"go1.24.0","cgo-enabled":false,"server-version":"1.23.1","debug-mode":false,"logging-call-at":"main.go:148"}

{"level":"info","ts":"2025-09-30T09:29:24.096Z","msg":"dynamic config changed for the key: limit.maxidlength oldValue: nil newValue: { constraints: {} value: 255 }","logging-call-at":"file_based_client.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.096Z","msg":"dynamic config changed for the key: system.forcesearchattributescacherefreshonread oldValue: nil newValue: { constraints: {} value: true }","logging-call-at":"file_based_client.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.096Z","msg":"Updated dynamic config","logging-call-at":"file_based_client.go:195"}

{"level":"warn","ts":"2025-09-30T09:29:24.096Z","msg":"Not using any authorizer and flag `--allow-no-auth` not detected. Future versions will require using the flag `--allow-no-auth` if you do not want to set an authorizer.","logging-call-at":"main.go:178"}

Waiting for Temporal server to start...

{"level":"info","ts":"2025-09-30T09:29:24.116Z","msg":"Use rpc address 127.0.0.1:7233 for cluster active.","component":"metadata-initializer","logging-call-at":"fx.go:724"}

{"level":"info","ts":"2025-09-30T09:29:24.176Z","msg":"historyClient: ownership caching disabled","service":"history","logging-call-at":"client.go:91"}

{"level":"info","ts":"2025-09-30T09:29:24.184Z","msg":"creating new visibility manager","visibility_plugin_name":"mysql","visibility_index_name":"","logging-call-at":"factory.go:166"}

{"level":"info","ts":"2025-09-30T09:29:24.187Z","msg":"Initialized lazy loaded OwnershipBasedQuotaScaler","service":"history","service":"history","logging-call-at":"fx.go:95"}

{"level":"info","ts":"2025-09-30T09:29:24.187Z","msg":"Initialized service resolver for persistence rate limiting","service":"history","service":"history","logging-call-at":"fx.go:92"}

{"level":"info","ts":"2025-09-30T09:29:24.187Z","msg":"Created gRPC listener","service":"history","address":"172.19.0.2:7234","logging-call-at":"rpc.go:153"}

{"level":"info","ts":"2025-09-30T09:29:24.197Z","msg":"Initialized service resolver for persistence rate limiting","service":"matching","service":"matching","logging-call-at":"fx.go:92"}

{"level":"info","ts":"2025-09-30T09:29:24.197Z","msg":"Created gRPC listener","service":"matching","address":"172.19.0.2:7235","logging-call-at":"rpc.go:153"}

{"level":"info","ts":"2025-09-30T09:29:24.197Z","msg":"historyClient: ownership caching disabled","service":"matching","logging-call-at":"client.go:91"}

{"level":"info","ts":"2025-09-30T09:29:24.201Z","msg":"creating new visibility manager","visibility_plugin_name":"mysql","visibility_index_name":"","logging-call-at":"factory.go:166"}

{"level":"info","ts":"2025-09-30T09:29:24.212Z","msg":"Initialized service resolver for persistence rate limiting","service":"frontend","service":"frontend","logging-call-at":"fx.go:92"}

{"level":"info","ts":"2025-09-30T09:29:24.212Z","msg":"historyClient: ownership caching disabled","service":"frontend","logging-call-at":"client.go:91"}

{"level":"info","ts":"2025-09-30T09:29:24.212Z","msg":"Created gRPC listener","service":"frontend","address":"172.19.0.2:7233","logging-call-at":"rpc.go:153"}

{"level":"info","ts":"2025-09-30T09:29:24.216Z","msg":"creating new visibility manager","visibility_plugin_name":"mysql","visibility_index_name":"","logging-call-at":"factory.go:166"}

{"level":"info","ts":"2025-09-30T09:29:24.216Z","msg":"Service is not requested, skipping initialization.","service":"internal-frontend","logging-call-at":"fx.go:522"}

{"level":"info","ts":"2025-09-30T09:29:24.223Z","msg":"Initialized service resolver for persistence rate limiting","service":"worker","service":"worker","logging-call-at":"fx.go:92"}

{"level":"info","ts":"2025-09-30T09:29:24.224Z","msg":"historyClient: ownership caching disabled","service":"worker","logging-call-at":"client.go:91"}

{"level":"info","ts":"2025-09-30T09:29:24.226Z","msg":"creating new visibility manager","visibility_plugin_name":"mysql","visibility_index_name":"","logging-call-at":"factory.go:166"}

{"level":"info","ts":"2025-09-30T09:29:24.227Z","msg":"PProf not started due to port not set","logging-call-at":"pprof.go:68"}

{"level":"info","ts":"2025-09-30T09:29:24.227Z","msg":"Starting server for services","value":{"frontend":{},"history":{},"matching":{},"worker":{}},"logging-call-at":"server_impl.go:93"}

{"level":"info","ts":"2025-09-30T09:29:24.234Z","msg":"fifo scheduler started","service":"history","logging-call-at":"fifo_scheduler.go:96"}

{"level":"info","ts":"2025-09-30T09:29:24.234Z","msg":"interleaved weighted round robin task scheduler started","service":"history","logging-call-at":"interleaved_weighted_round_robin.go:129"}

{"level":"info","ts":"2025-09-30T09:29:24.235Z","msg":"fifo scheduler started","service":"history","logging-call-at":"fifo_scheduler.go:96"}

{"level":"info","ts":"2025-09-30T09:29:24.235Z","msg":"interleaved weighted round robin task scheduler started","service":"history","logging-call-at":"interleaved_weighted_round_robin.go:129"}

{"level":"info","ts":"2025-09-30T09:29:24.236Z","msg":"fifo scheduler started","service":"history","component":"memory-scheduled-queue-processor","logging-call-at":"fifo_scheduler.go:96"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"fifo scheduler started","service":"history","logging-call-at":"fifo_scheduler.go:96"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"RuntimeMetricsReporter started","service":"worker","logging-call-at":"runtime.go:138"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"RuntimeMetricsReporter started","service":"frontend","logging-call-at":"runtime.go:138"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"RuntimeMetricsReporter started","service":"matching","logging-call-at":"runtime.go:138"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"worker starting","service":"worker","component":"worker","logging-call-at":"service.go:343"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"frontend starting","service":"frontend","logging-call-at":"service.go:350"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"interleaved weighted round robin task scheduler started","service":"history","logging-call-at":"interleaved_weighted_round_robin.go:129"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"matching starting","service":"matching","logging-call-at":"service.go:90"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"Starting to serve on frontend listener","service":"frontend","logging-call-at":"service.go:368"}

{"level":"info","ts":"2025-09-30T09:29:24.237Z","msg":"Starting to serve on matching listener","service":"matching","logging-call-at":"service.go:102"}

{"level":"info","ts":"2025-09-30T09:29:24.238Z","msg":"fifo scheduler started","service":"history","logging-call-at":"fifo_scheduler.go:96"}

{"level":"info","ts":"2025-09-30T09:29:24.238Z","msg":"interleaved weighted round robin task scheduler started","service":"history","logging-call-at":"interleaved_weighted_round_robin.go:129"}

{"level":"info","ts":"2025-09-30T09:29:24.239Z","msg":"RuntimeMetricsReporter started","service":"history","logging-call-at":"runtime.go:138"}

{"level":"info","ts":"2025-09-30T09:29:24.240Z","msg":"sequential scheduler started","logging-call-at":"sequential_scheduler.go:96"}

{"level":"info","ts":"2025-09-30T09:29:24.240Z","msg":"history starting","service":"history","logging-call-at":"service.go:90"}

{"level":"info","ts":"2025-09-30T09:29:24.240Z","msg":"Replication task fetchers started.","logging-call-at":"task_fetcher.go:142"}

{"level":"info","ts":"2025-09-30T09:29:24.240Z","msg":"none","component":"shard-controller","address":"172.19.0.2:7234","lifecycle":"Started","logging-call-at":"controller_impl.go:135"}

{"level":"info","ts":"2025-09-30T09:29:24.240Z","msg":"Starting to serve on history listener","service":"history","logging-call-at":"service.go:101"}

{"level":"info","ts":"2025-09-30T09:29:24.242Z","msg":"Membership heartbeat upserted successfully","address":"172.19.0.2","port":6935,"hostId":"f3cbc5eb-9ddf-11f0-adc1-a6a295d3aa2b","logging-call-at":"monitor.go:306"}

{"level":"info","ts":"2025-09-30T09:29:24.246Z","msg":"bootstrap hosts fetched","bootstrap-hostports":"172.19.0.2:6934,172.19.0.2:6935","logging-call-at":"monitor.go:348"}

{"level":"info","ts":"2025-09-30T09:29:24.246Z","msg":"Membership heartbeat upserted successfully","address":"172.19.0.2","port":6934,"hostId":"f3c10e5a-9ddf-11f0-adc1-a6a295d3aa2b","logging-call-at":"monitor.go:306"}

{"level":"info","ts":"2025-09-30T09:29:24.248Z","msg":"bootstrap hosts fetched","bootstrap-hostports":"172.19.0.2:6934,172.19.0.2:6935","logging-call-at":"monitor.go:348"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"Current reachable members","component":"service-resolver","service":"history","addresses":["172.19.0.2:7234"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"none","component":"shard-controller","address":"172.19.0.2:7234","component":"shard-controller","address":"172.19.0.2:7234","shard-update":"RingMembershipChangedEvent","number-processed":1,"number-deleted":0,"logging-call-at":"ownership.go:116"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","lifecycle":"Started","component":"shard-context","logging-call-at":"context_impl.go:1563"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"none","component":"shard-controller","address":"172.19.0.2:7234","numShards":1,"logging-call-at":"controller_impl.go:285"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","lifecycle":"Started","component":"shard-context","logging-call-at":"context_impl.go:1563"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"none","component":"shard-controller","address":"172.19.0.2:7234","numShards":2,"logging-call-at":"controller_impl.go:285"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"none","shard-id":2,"address":"172.19.0.2:7234","lifecycle":"Started","component":"shard-context","logging-call-at":"context_impl.go:1563"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"none","component":"shard-controller","address":"172.19.0.2:7234","numShards":3,"logging-call-at":"controller_impl.go:285"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","lifecycle":"Started","component":"shard-context","logging-call-at":"context_impl.go:1563"}

{"level":"info","ts":"2025-09-30T09:29:24.249Z","msg":"none","component":"shard-controller","address":"172.19.0.2:7234","numShards":4,"logging-call-at":"controller_impl.go:285"}

{"level":"info","ts":"2025-09-30T09:29:24.253Z","msg":"Membership heartbeat upserted successfully","address":"172.19.0.2","port":6933,"hostId":"f3ce1151-9ddf-11f0-adc1-a6a295d3aa2b","logging-call-at":"monitor.go:306"}

{"level":"info","ts":"2025-09-30T09:29:24.254Z","msg":"bootstrap hosts fetched","bootstrap-hostports":"172.19.0.2:6935,172.19.0.2:6933,172.19.0.2:6934","logging-call-at":"monitor.go:348"}

{"level":"info","ts":"2025-09-30T09:29:24.256Z","msg":"Current reachable members","component":"service-resolver","service":"history","addresses":["172.19.0.2:7234"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.256Z","msg":"Current reachable members","component":"service-resolver","service":"frontend","addresses":["172.19.0.2:7233"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.256Z","msg":"Frontend is now healthy","service":"frontend","logging-call-at":"workflow_handler.go:227"}

{"level":"info","ts":"2025-09-30T09:29:24.256Z","msg":"Current reachable members","component":"service-resolver","service":"frontend","addresses":["172.19.0.2:7233"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"Range updated for shardID","shard-id":1,"address":"172.19.0.2:7234","shard-range-id":2,"previous-shard-range-id":1,"logging-call-at":"context_impl.go:1190"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"Task key range updated","shard-id":1,"address":"172.19.0.2:7234","number":2097152,"next-number":3145728,"logging-call-at":"task_key_generator.go:177"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"Acquired shard","shard-id":1,"address":"172.19.0.2:7234","logging-call-at":"context_impl.go:1930"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","lifecycle":"Starting","component":"shard-engine","logging-call-at":"context_impl.go:1419"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"history-engine","lifecycle":"Starting","logging-call-at":"history_engine.go:308"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"visibility-queue-processor","lifecycle":"Starting","logging-call-at":"queue_immediate.go:112"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"Task rescheduler started.","shard-id":1,"address":"172.19.0.2:7234","component":"visibility-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"visibility-queue-processor","lifecycle":"Started","logging-call-at":"queue_immediate.go:121"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"timer-queue-processor","lifecycle":"Starting","logging-call-at":"queue_scheduled.go:155"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"Task rescheduler started.","shard-id":1,"address":"172.19.0.2:7234","component":"timer-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"timer-queue-processor","lifecycle":"Started","logging-call-at":"queue_scheduled.go:164"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"transfer-queue-processor","lifecycle":"Starting","logging-call-at":"queue_immediate.go:112"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"Task rescheduler started.","shard-id":1,"address":"172.19.0.2:7234","component":"transfer-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"queue reader started","shard-id":1,"address":"172.19.0.2:7234","component":"visibility-queue-processor","queue-reader-id":0,"lifecycle":"Started","logging-call-at":"reader.go:182"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"transfer-queue-processor","lifecycle":"Started","logging-call-at":"queue_immediate.go:121"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"archival-queue-processor","lifecycle":"Starting","logging-call-at":"queue_scheduled.go:155"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"Task rescheduler started.","shard-id":1,"address":"172.19.0.2:7234","component":"archival-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"archival-queue-processor","lifecycle":"Started","logging-call-at":"queue_scheduled.go:164"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","service":"history","component":"memory-scheduled-queue-processor","lifecycle":"Starting","logging-call-at":"memory_scheduled_queue.go:103"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","service":"history","component":"memory-scheduled-queue-processor","lifecycle":"Started","logging-call-at":"memory_scheduled_queue.go:108"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","component":"history-engine","lifecycle":"Started","logging-call-at":"history_engine.go:317"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"none","shard-id":1,"address":"172.19.0.2:7234","lifecycle":"Started","component":"shard-engine","logging-call-at":"context_impl.go:1422"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"queue reader started","shard-id":1,"address":"172.19.0.2:7234","component":"archival-queue-processor","queue-reader-id":0,"lifecycle":"Started","logging-call-at":"reader.go:182"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"queue reader started","shard-id":1,"address":"172.19.0.2:7234","component":"transfer-queue-processor","queue-reader-id":0,"lifecycle":"Started","logging-call-at":"reader.go:182"}

{"level":"info","ts":"2025-09-30T09:29:24.262Z","msg":"queue reader started","shard-id":1,"address":"172.19.0.2:7234","component":"timer-queue-processor","queue-reader-id":0,"lifecycle":"Started","logging-call-at":"reader.go:182"}

{"level":"info","ts":"2025-09-30T09:29:24.277Z","msg":"Membership heartbeat upserted successfully","address":"172.19.0.2","port":6939,"hostId":"f3cfd813-9ddf-11f0-adc1-a6a295d3aa2b","logging-call-at":"monitor.go:306"}

{"level":"info","ts":"2025-09-30T09:29:24.291Z","msg":"Current reachable members","component":"service-resolver","service":"history","addresses":["172.19.0.2:7234"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.291Z","msg":"Current reachable members","component":"service-resolver","service":"frontend","addresses":["172.19.0.2:7233"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.291Z","msg":"Current reachable members","component":"service-resolver","service":"matching","addresses":["172.19.0.2:7235"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.292Z","msg":"Range updated for shardID","shard-id":3,"address":"172.19.0.2:7234","shard-range-id":2,"previous-shard-range-id":1,"logging-call-at":"context_impl.go:1190"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"Task key range updated","shard-id":3,"address":"172.19.0.2:7234","number":2097152,"next-number":3145728,"logging-call-at":"task_key_generator.go:177"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"Acquired shard","shard-id":3,"address":"172.19.0.2:7234","logging-call-at":"context_impl.go:1930"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","lifecycle":"Starting","component":"shard-engine","logging-call-at":"context_impl.go:1419"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"history-engine","lifecycle":"Starting","logging-call-at":"history_engine.go:308"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"visibility-queue-processor","lifecycle":"Starting","logging-call-at":"queue_immediate.go:112"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"Task rescheduler started.","shard-id":3,"address":"172.19.0.2:7234","component":"visibility-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"visibility-queue-processor","lifecycle":"Started","logging-call-at":"queue_immediate.go:121"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"timer-queue-processor","lifecycle":"Starting","logging-call-at":"queue_scheduled.go:155"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"Task rescheduler started.","shard-id":3,"address":"172.19.0.2:7234","component":"timer-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"timer-queue-processor","lifecycle":"Started","logging-call-at":"queue_scheduled.go:164"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"transfer-queue-processor","lifecycle":"Starting","logging-call-at":"queue_immediate.go:112"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"Task rescheduler started.","shard-id":3,"address":"172.19.0.2:7234","component":"transfer-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"transfer-queue-processor","lifecycle":"Started","logging-call-at":"queue_immediate.go:121"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"archival-queue-processor","lifecycle":"Starting","logging-call-at":"queue_scheduled.go:155"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"Task rescheduler started.","shard-id":3,"address":"172.19.0.2:7234","component":"archival-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"archival-queue-processor","lifecycle":"Started","logging-call-at":"queue_scheduled.go:164"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","service":"history","component":"memory-scheduled-queue-processor","lifecycle":"Starting","logging-call-at":"memory_scheduled_queue.go:103"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","service":"history","component":"memory-scheduled-queue-processor","lifecycle":"Started","logging-call-at":"memory_scheduled_queue.go:108"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","component":"history-engine","lifecycle":"Started","logging-call-at":"history_engine.go:317"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"none","shard-id":3,"address":"172.19.0.2:7234","lifecycle":"Started","component":"shard-engine","logging-call-at":"context_impl.go:1422"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"queue reader started","shard-id":3,"address":"172.19.0.2:7234","component":"visibility-queue-processor","queue-reader-id":0,"lifecycle":"Started","logging-call-at":"reader.go:182"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"queue reader started","shard-id":3,"address":"172.19.0.2:7234","component":"archival-queue-processor","queue-reader-id":0,"lifecycle":"Started","logging-call-at":"reader.go:182"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"queue reader started","shard-id":3,"address":"172.19.0.2:7234","component":"timer-queue-processor","queue-reader-id":0,"lifecycle":"Started","logging-call-at":"reader.go:182"}

{"level":"info","ts":"2025-09-30T09:29:24.293Z","msg":"queue reader started","shard-id":3,"address":"172.19.0.2:7234","component":"transfer-queue-processor","queue-reader-id":0,"lifecycle":"Started","logging-call-at":"reader.go:182"}

{"level":"info","ts":"2025-09-30T09:29:24.294Z","msg":"bootstrap hosts fetched","bootstrap-hostports":"172.19.0.2:6934,172.19.0.2:6935,172.19.0.2:6933,172.19.0.2:6939","logging-call-at":"monitor.go:348"}

{"level":"info","ts":"2025-09-30T09:29:24.295Z","msg":"Current reachable members","component":"service-resolver","service":"matching","addresses":["172.19.0.2:7235"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.296Z","msg":"Current reachable members","component":"service-resolver","service":"history","addresses":["172.19.0.2:7234"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.296Z","msg":"Current reachable members","component":"service-resolver","service":"worker","addresses":["172.19.0.2:7239"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.296Z","msg":"Current reachable members","component":"service-resolver","service":"frontend","addresses":["172.19.0.2:7233"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.296Z","msg":"Current reachable members","component":"service-resolver","service":"matching","addresses":["172.19.0.2:7235"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.296Z","msg":"Current reachable members","component":"service-resolver","service":"worker","addresses":["172.19.0.2:7239"],"logging-call-at":"service_resolver.go:275"}

{"level":"info","ts":"2025-09-30T09:29:24.297Z","msg":"Range updated for shardID","shard-id":4,"address":"172.19.0.2:7234","shard-range-id":2,"previous-shard-range-id":1,"logging-call-at":"context_impl.go:1190"}

{"level":"info","ts":"2025-09-30T09:29:24.297Z","msg":"Task key range updated","shard-id":4,"address":"172.19.0.2:7234","number":2097152,"next-number":3145728,"logging-call-at":"task_key_generator.go:177"}

{"level":"info","ts":"2025-09-30T09:29:24.297Z","msg":"Acquired shard","shard-id":4,"address":"172.19.0.2:7234","logging-call-at":"context_impl.go:1930"}

{"level":"info","ts":"2025-09-30T09:29:24.297Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","lifecycle":"Starting","component":"shard-engine","logging-call-at":"context_impl.go:1419"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","component":"history-engine","lifecycle":"Starting","logging-call-at":"history_engine.go:308"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","component":"visibility-queue-processor","lifecycle":"Starting","logging-call-at":"queue_immediate.go:112"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"Task rescheduler started.","shard-id":4,"address":"172.19.0.2:7234","component":"visibility-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","component":"visibility-queue-processor","lifecycle":"Started","logging-call-at":"queue_immediate.go:121"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","component":"timer-queue-processor","lifecycle":"Starting","logging-call-at":"queue_scheduled.go:155"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"Task rescheduler started.","shard-id":4,"address":"172.19.0.2:7234","component":"timer-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","component":"timer-queue-processor","lifecycle":"Started","logging-call-at":"queue_scheduled.go:164"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","component":"transfer-queue-processor","lifecycle":"Starting","logging-call-at":"queue_immediate.go:112"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"Task rescheduler started.","shard-id":4,"address":"172.19.0.2:7234","component":"transfer-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","component":"transfer-queue-processor","lifecycle":"Started","logging-call-at":"queue_immediate.go:121"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","component":"archival-queue-processor","lifecycle":"Starting","logging-call-at":"queue_scheduled.go:155"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"Task rescheduler started.","shard-id":4,"address":"172.19.0.2:7234","component":"archival-queue-processor","lifecycle":"Started","logging-call-at":"rescheduler.go:127"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","shard-id":4,"address":"172.19.0.2:7234","component":"archival-queue-processor","lifecycle":"Started","logging-call-at":"queue_scheduled.go:164"}

{"level":"info","ts":"2025-09-30T09:29:24.298Z","msg":"none","service":"history","component":"memory-scheduled-queue-processor","lifecycle":"Starting","logging-call-at":"memory_scheduled_queue.go:103"}